As more generative AI innovations become available, the question of what’s trustworthy becomes more acute.

So, what does that mean for admins who are responsible for influencing how generative AI shows up for humans? Let us share some context and guidance for ways to support human-generative AI interaction.

Salesforce Research & Insights recently conducted research that shows AI is more trusted and desirable when humans work in tandem with AI – the so-called “human in the loop.” The team collected more than 1,000 generative AI use cases at Dreamforce and hosted deep-dive conversations with 30 Salesforce buyers, admins, and end users.

Results show that customers and users do believe in the potential of AI to increase efficiency and consistency, bring inspiration and fulfillment, and improve how they organize their thoughts. But all customers and users in the study reported that they aren’t ready to fully trust generative AI without a human at the helm.

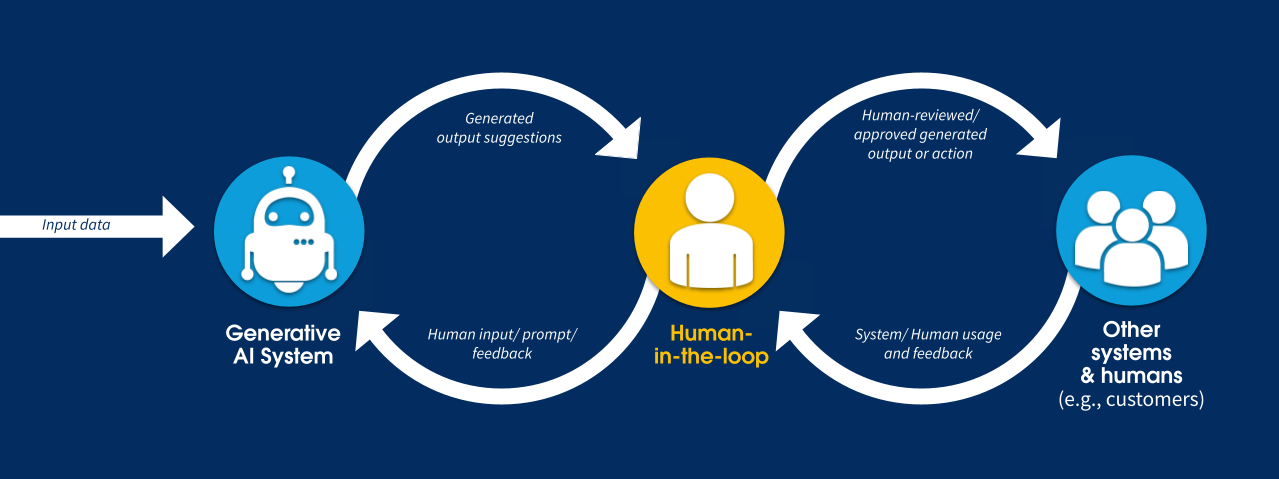

What is a ‘human in the loop’?

Originally used in military, nuclear energy, and aviation contexts, “human in the loop” (HITL) referred to the ability of humans to intervene in automated systems to prevent disasters. In the context of generative AI, HITL is about giving humans an opportunity to review and act upon AI-generated content.

Our recent studies reveal, however, that in many cases, humans — employees at your organization or business — should be even more thoughtfully and meaningfully embedded into the process as the “human-at-the-helm.”

Human involvement increases quality and accuracy and is the primary driver for trust

An important learning from this research is that customers and users understand generative AI isn’t perfect. It can be unpredictable, inaccurate, and at times can make things up. Not knowing the data source or the completeness and quality of that data also degrades customers’ and users’ trust. Not to mention, the learning curve for how to most effectively prompt generative AI is steep.

Without effective prompts, users recognize that their chances of achieving quality outputs are limited. When it comes to finessing outputs, customers and users believe that people bring skills, expertise, and “humanness” that generative AI can’t.

For nearly all use cases, customers and users believe there needs to be at least an initial trust-building period that involves high human touch. Of the 90 use cases collected in one research session, only two were appropriate for full autonomy.

HITL is especially important for compound tasks (i.e., “Do X, based on W” or “Do X, then do Y”), macro-level tasks (e.g., “define a sales forecasting strategy”), or high-risk tasks (e.g., “email a high-value customer” or “diagnose an illness”).

Situations calling for increased human touch can include:

- When the data source is unknown or untrusted.

- When the output is for external use.

- When using the output as a final deliverable.

- When using the output to impersonate (e.g., a script for a sales call) rather than inform (e.g., information about a prospect to consume ahead of a cold call).

- When the user is still learning prompt writing, since poor prompts lead to more effort to finesse outputs.

- For personal, bespoke, persuasive outputs (e.g., a marketing campaign).

- When the inputs needed to achieve the desired output are qualitative, nuanced, or implicit (not codified).

- When inputs needed are recent (not yet in the dataset).

- When users have experienced generative AI errors before (low-trust environments).

- When stakes are high (e.g., high financial impact, broad reach, risk to end consumer, legal risk, when it’s not socially acceptable to use AI).

- When humans are doing the job well today (and the tool is meant to supercharge those humans, not fill a gap in human resources).

In these situations, more deeply involving a human in the decision-making can help us achieve responsible AI by:

- improving accuracy and quality – humans can catch errors, add context or expertise, and infuse human tone;

- ensuring safety – humans can mitigate ethical and legal risks;

- and promoting user empowerment.

Human input spurs continuous improvement of the generative AI system and helps customers and users build trust with the technology.
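For admins who want to make the situations listed above concrete, here is a minimal sketch of how a handful of them could be turned into a review-level decision. Everything in it is a hypothetical illustration rather than a Salesforce feature: the `TaskContext` fields, the level names, and the thresholds are assumptions, and a real system would tune them with its own users.

```python
from __future__ import annotations

from dataclasses import dataclass


@dataclass
class TaskContext:
    """Hypothetical signals, loosely mirroring some of the situations listed above."""
    data_source_trusted: bool = True
    external_use: bool = False
    final_deliverable: bool = False
    high_stakes: bool = False
    user_new_to_prompting: bool = False
    prior_ai_errors: bool = False


def risk_signals(ctx: TaskContext) -> list[str]:
    """Return the names of the signals that call for more human touch."""
    checks = {
        "untrusted data source": not ctx.data_source_trusted,
        "external use": ctx.external_use,
        "final deliverable": ctx.final_deliverable,
        "high stakes": ctx.high_stakes,
        "user still learning prompts": ctx.user_new_to_prompting,
        "prior AI errors": ctx.prior_ai_errors,
    }
    return [name for name, triggered in checks.items() if triggered]


def review_level(ctx: TaskContext) -> str:
    """Map signals to a review level. Thresholds are illustrative assumptions."""
    signals = risk_signals(ctx)
    # High stakes alone, or any two signals together, put a human at the helm.
    if ctx.high_stakes or len(signals) >= 2:
        return "human_at_the_helm"
    # A single signal still requires a human to review before use.
    if signals:
        return "human_review"
    # Even with no signals, this sketch keeps a light human check.
    return "spot_check"


if __name__ == "__main__":
    ctx = TaskContext(external_use=True, final_deliverable=True)
    print(review_level(ctx), risk_signals(ctx))
```

Returning the triggered signals alongside the level lets the interface explain why extra review is being asked for, instead of adding unexplained friction.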

Building trust takes time

Trust is the most critical aspect of generative AI, but trust is not a one-time conversation. It’s a journey: trust takes time to cultivate but can be quick to break. Nearly all research participants shared experiences of trusting generative AI that ultimately failed them. Customers hope for a reliable pattern of accuracy and quality over time.

To cultivate trust over time and help users build confidence in AI technology, our research has shown generative AI systems should be designed to keep humans at the helm — especially during an initial trust-building or onboarding period — and enable continuous learning for both the user and the machine.

Through these experiences:

- thoughtfully involve human judgment and expertise;

- help the human understand the system by being transparent and honest about how the system works and what its limitations are;

- afford users the opportunity to give feedback to the system;

- and design the system to take in signals, make sense of users’ needs, and guide users in how to effectively collaborate with the technology.

Uphold the value proposition of efficiency

Most compelling to research participants is generative AI’s promise to reduce time on task. Customers warned that they might abandon or avoid systems that undermine this value proposition. The research revealed several ways to ensure efficiency:

- To minimize the effort of editing outputs: guide users in writing effective prompts. Help them identify where and how to add their expertise.

- To limit “swivel-chairing” between tools: support users in micro-editing outputs from within the generative AI system. Example: give users a way to choose from output options, re-prompt, or tweak specific words or phrases in-app.

Motivate over mandate

Unavoidable and seemingly arbitrary friction, without an explanation of the benefits or reasons for the friction, can cause frustration. When possible, inspire users to participate, rather than requiring them to do so. To drive willing human participation, generative AI systems should be designed to:

- Provide inspiration. Example: offer output options to choose from.

- Foster exploration and experimentation. Example: provide the opportunity to easily iterate and micro-edit outputs.

- Prioritize informative guardrails over restrictive guardrails. Example: flag potential risk. Empower users to determine the appropriate course of action, rather than completely blocking them from taking a certain action.
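
The difference between informative and restrictive guardrails can be sketched in a few lines. This is a minimal illustration under stated assumptions, not an actual product API: `RISKY_TERMS`, `GuardrailResult`, and both functions are hypothetical names, and simple substring matching stands in for whatever risk detection a real system would use.

```python
from __future__ import annotations

from dataclasses import dataclass, field

# Hypothetical terms that might signal legal or ethical risk in a draft.
RISKY_TERMS = ("guarantee", "diagnosis")


@dataclass
class GuardrailResult:
    allowed: bool                      # may the user proceed with the draft?
    warnings: list[str] = field(default_factory=list)


def _find_risky_terms(draft: str) -> list[str]:
    """Case-insensitive substring match; a stand-in for real risk detection."""
    lowered = draft.lower()
    return [term for term in RISKY_TERMS if term in lowered]


def restrictive_guardrail(draft: str) -> GuardrailResult:
    """Block the action outright whenever a risky term appears."""
    hits = _find_risky_terms(draft)
    return GuardrailResult(
        allowed=not hits,
        warnings=[f"Blocked: contains '{t}'" for t in hits],
    )


def informative_guardrail(draft: str) -> GuardrailResult:
    """Flag the risk, but leave the decision with the user."""
    hits = _find_risky_terms(draft)
    return GuardrailResult(
        allowed=True,
        warnings=[f"Review suggested: contains '{t}'" for t in hits],
    )


if __name__ == "__main__":
    draft = "We guarantee results within a week."
    print(restrictive_guardrail(draft))
    print(informative_guardrail(draft))
```

Both versions detect the same risk. The informative one surfaces it as a warning and keeps the human at the helm, while the restrictive one removes the choice.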

Embrace different strokes for different folks (and scenarios)

In our research, we prioritized inclusivity by actively involving neurodivergent users and those with English as a second language as experts in digital exclusion. We collaborated with them to envision solutions that ensure the technology benefits everyone from the outset.

This highlighted that different skills, abilities, situations, and use cases call for different modes of interaction. Design for these differences, and/or design for optionality. Among the ways to design generative AI systems with inclusivity in mind are:

- Prioritize multi-modality, or varied modes of interaction.

- Support users’ focus and help them efficiently achieve success. Example: provide in-app guidance and tips.

- Mitigate language challenges. Example: include spell-check features or feedback mechanisms that don’t involve open text fields.

Finally, make sure you’re engaging users in guiding the design of the system to make it work better for them. Ask them questions like: How did that interaction feel? Did it foster trust, support efficiency, or feel fulfilling?

Ultimately, thoughtfully embedding a human touch into your burgeoning AI system helps foster support and trust with your team. It will motivate the adoption of a new technology that has the potential to transform your business.

Stay tuned for more guidance and resources on designing for the human-at-the-helm coming in the new year.