Can An AI Assistant Make Us Faster and More Creative?

Generative AI can increase productivity in some cases – here's how to make the best of it.

Recently, I asked my 16-year-old son if he had heard of ChatGPT (“Um, yeah, dad.”) and whether he was using it at school. He said it helps him generate templates or starting points for essays. I then asked him if ChatGPT makes him smarter. No, he said, just faster. That made me curious whether I could apply generative AI (not just ChatGPT) to my own work. Would it make me faster or more creative, and how?

According to a recent Salesforce study, 40% of desk workers don’t know how to use generative AI effectively at work. Meanwhile, a study by the Nielsen Norman Group (NNG) found that, on average, generative AI tools increase business users’ throughput by 66% when they perform realistic tasks. I don’t know about you, but a 66% increase in productivity sounds pretty appealing to me!

Here’s a typical day for me: After making a fresh cup of coffee, I dig myself out of a pile of Slacks and emails. I go from meeting to meeting and often get to do my “real work” only late at night when I have some quiet time. My team members have reported similar experiences. The work can pile up and there’s only so much time in the day. So we decided to experiment with using what we call an AI assistant.

We are Salesforce’s Ignite team, an innovation consulting group. We help our most strategic customers tackle their biggest challenges. With that in mind, we asked ourselves three questions:

- How quickly can we get up to speed on a new project?

- Can we make our research phase faster?

- How might we translate our vision into inspiring visual assets?

For our experiment, we used a range of publicly available AI tools. We measured the time each one saved us and scored each result on a scale of 1 to 10, which we call the AI Sidekick Score.

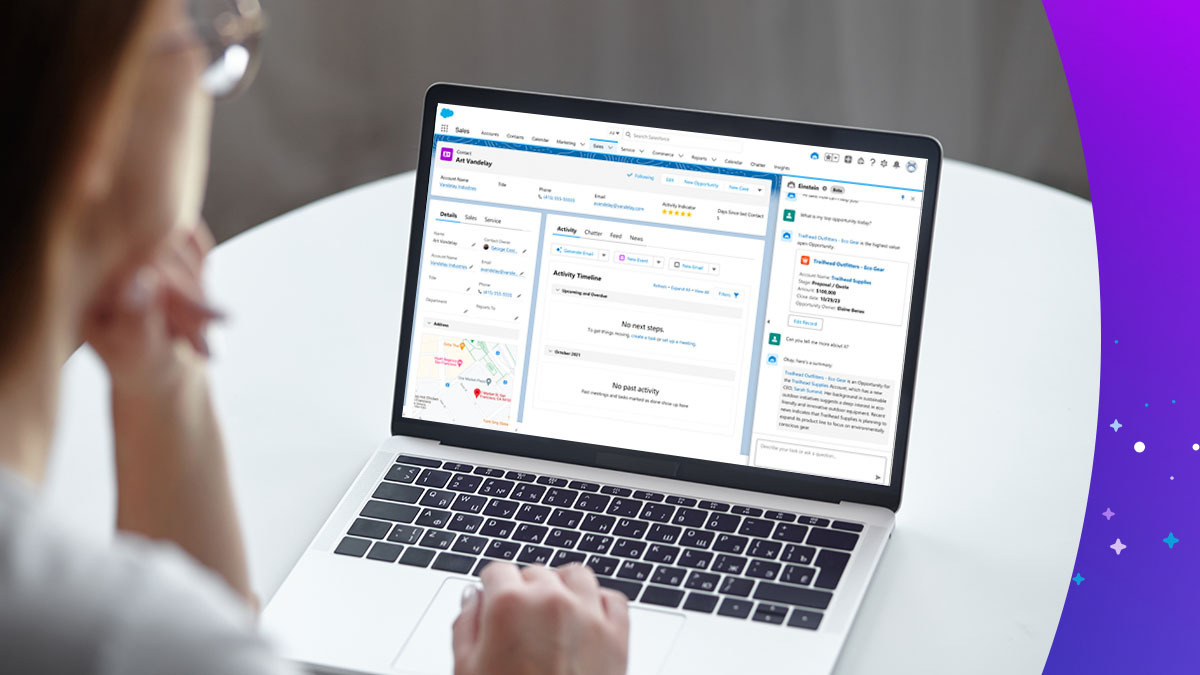

AI assistant as an onboarding buddy

On average, our Ignite teams take eight to 12 weeks to go from onboarding to the final readout. There’s no time to waste, so the first 48 hours are crucial for understanding our customer’s business, strategic context, and competition. But what if we could cut that time to just 48 seconds?

Large language models (LLMs) are very good at summarization. Wouldn’t it be great if we could get an LLM to do all the secondary research for us and summarize it so we can get up to speed faster? That’s where it gets a bit tricky. We ran into a few challenges:

Cutoff dates: Every LLM has a training-data cutoff date. As of this writing, for example, ChatGPT’s knowledge extends only to April 2023, and Claude 2’s only to December 2022.

Hallucinations: Because LLMs work by predicting the next word in a sequence, when you give one a task (such as “Look up the company’s most recent investor relations presentation and analyze the trends.”), you’re really asking for a response that sounds correct, which is not necessarily one that is correct. You have to ground and refine your prompts so that the model sticks to the facts and doesn’t make anything up. Even then, you have to double-check the results.
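To make the grounding advice above a little more concrete, here is a minimal Python sketch. It assumes you have already gathered the relevant source excerpts yourself (for example, passages from an investor relations deck); the helpers `build_grounded_prompt` and `unknown_citations` are hypothetical, and the code does not call any real LLM API. It builds a prompt that restricts the model to those excerpts and asks for a citation after every claim, then flags any citation in the answer that doesn’t match a supplied source, which speeds up the double-checking step.

```python
import re


def build_grounded_prompt(question: str, sources: dict[str, str]) -> str:
    """Assemble a prompt that restricts the model to the supplied excerpts.

    `sources` maps a short ID (e.g. "S1") to an excerpt you retrieved yourself.
    This is a hypothetical helper, not part of any vendor's API.
    """
    excerpt_lines = [f"[{sid}] {text.strip()}" for sid, text in sources.items()]
    return "\n".join([
        "Answer using ONLY the sources below.",
        "Cite the source ID in square brackets after every claim, e.g. [S1].",
        "If the sources do not contain the answer, reply exactly: NOT IN SOURCES.",
        "",
        "Sources:",
        *excerpt_lines,
        "",
        f"Question: {question}",
    ])


def unknown_citations(answer: str, sources: dict[str, str]) -> set[str]:
    """Return citation IDs in the model's answer that match no supplied source."""
    cited = set(re.findall(r"\[([A-Za-z0-9_-]+)\]", answer))
    return cited - set(sources)
```

A non-empty result from `unknown_citations` is a quick signal that the model strayed outside the material you gave it. The check can’t catch a misstated fact sitting under a valid citation, so a human review is still needed.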

Results:

- Preferred Tools: To test this, our team created a custom Strategic Insights GPT in OpenAI’s GPT Store. We also experimented with Claude 2, which came a close second, and Bard, which came third.

- Time Saved: 4-5 hours

- AI Sidekick Score: 7.5 – The tools are getting better, hallucinate less, and can quickly pull relevant financial and competitive information.

Summary: These tools are rapidly improving and can now perform internet searches and read through the resulting links to find and collate relevant information. With the right prompting (which custom GPTs make easier to set up quickly), we were less likely to receive erroneous or hallucinated information. The results can still be inconsistent, but overall, these models perform roughly at the level of a junior associate.

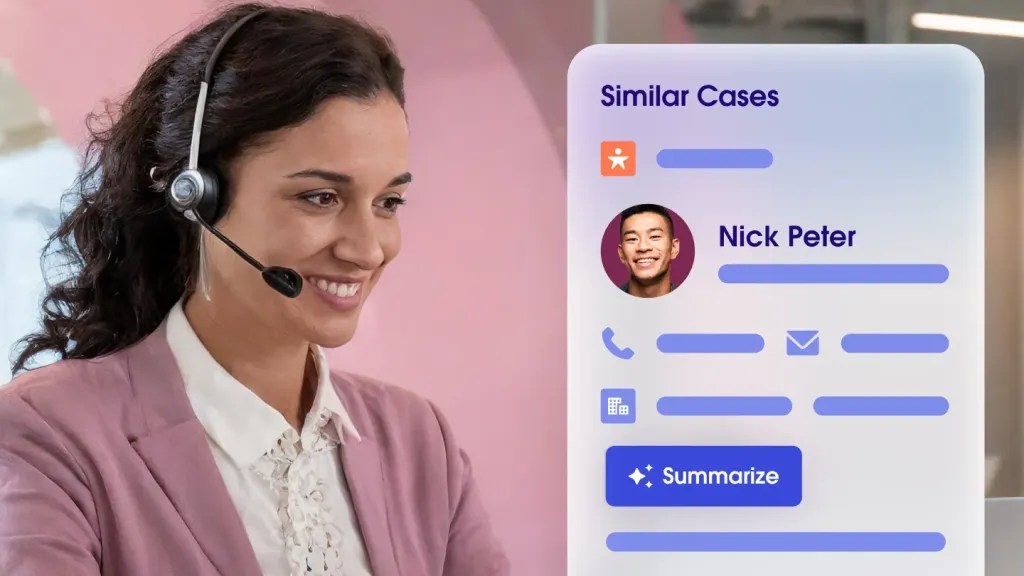

AI assistant as a co-researcher

One of the most crucial aspects of our work involves primary research. Employing a human-centered lens helps us gain a deeper understanding of our customers’ challenges as well as the needs, pains, and jobs-to-be-done of a diverse set of stakeholders. In a world where speed is the only constant, the mounds of data collected can leave even the most skilled researcher overwhelmed and pressed for time.

Typically, we conduct about 15 to 20 (or more) hour-long interviews per project. Transcription is automated, thanks to tools such as Otter.ai or Google Hangouts. Yet synthesizing and analyzing all of these interviews can become tedious and time-consuming, especially when there’s so much at stake. A research assistant that could synthesize and analyze interview transcripts or key takeaways, identify top themes, and provide a set of meaningful insights would be invaluable.

Using AI as a co-researcher gives researchers a fast but smart starting point. There are a few gotchas, however:

Data privacy: Data you enter into ChatGPT or other LLMs may be used to train those models further and could even appear in responses to later queries. When dealing with sensitive information such as interview transcripts, it’s imperative to handle the data carefully. For our interview analysis, we used our own Salesforce gateway, which has a trusted layer with a zero-retention policy and data masking. OpenAI also lets ChatGPT users disable chat history and training, noting on its website that doing so will mean that “new conversations won’t be used to train and improve our models.”

Human context: As for the synthesis and analysis itself, the results were helpful but lacked context. Because we grounded the prompts properly, the models rarely hallucinated, but we still had to refine the output with more context, depth, and nuance.
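The data masking mentioned under the data-privacy gotcha above can be illustrated with a small sketch. This is not how Salesforce’s gateway or trusted layer works internally (the post doesn’t describe that); it is a minimal Python example assuming you know participants’ names in advance and that simple regular expressions are enough to catch emails and phone numbers. `mask_transcript` and `unmask` are hypothetical helpers. Placeholders replace sensitive values before a transcript leaves your environment, and the mapping stays local so you can restore the originals in the model’s output.

```python
import re

# Deliberately simple patterns (an assumption for illustration);
# real PII detection needs far broader coverage.
EMAIL_RE = re.compile(r"[\w.+-]+@[\w-]+(?:\.[\w-]+)+")
PHONE_RE = re.compile(r"\+?\d[\d\s().-]{7,}\d")


def mask_transcript(text: str, names: list[str]) -> tuple[str, dict[str, str]]:
    """Replace emails, phone numbers, and known names with placeholders.

    Returns the masked text and a placeholder -> original mapping that
    never leaves your environment. Repeated values reuse one placeholder.
    """
    mapping: dict[str, str] = {}

    def substitute(pattern: re.Pattern, label: str, s: str) -> str:
        def repl(match: re.Match) -> str:
            original = match.group(0)
            for placeholder, value in mapping.items():
                if value == original:  # same value -> same placeholder
                    return placeholder
            placeholder = f"<{label}_{len(mapping) + 1}>"
            mapping[placeholder] = original
            return placeholder

        return pattern.sub(repl, s)

    # Emails and phones first, so a name inside an address can't split it.
    text = substitute(EMAIL_RE, "EMAIL", text)
    text = substitute(PHONE_RE, "PHONE", text)
    # Longest names first, so "Ann Lee" is masked before "Ann".
    for name in sorted(names, key=len, reverse=True):
        text = substitute(re.compile(rf"\b{re.escape(name)}\b"), "PERSON", text)
    return text, mapping


def unmask(text: str, mapping: dict[str, str]) -> str:
    """Restore original values in model output that echoes the placeholders."""
    for placeholder, original in mapping.items():
        text = text.replace(placeholder, original)
    return text
```

The closing angle bracket in each placeholder keeps `<PERSON_1>` from matching inside `<PERSON_10>` during unmasking. Names you didn’t list, addresses, and account numbers would slip through, so treat this as an illustration of the idea rather than a safeguard.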

Results:

- Preferred Tools: Einstein (custom integration)

- Time Saved: 12-18 hours

- AI Sidekick Score: 8 – Provided a fast, easy way to summarize and synthesize transcripts, with results on par with a human’s. However, the depth of insight fell short of what a human researcher produces. The quotes it chose weren’t always relevant and were sometimes misattributed.

Summary: Some argue that speed hinders research, because good research demands intention, thoughtful learning objectives, and time to make sense of contextual findings. Our AI research assistant accelerates the process, surfacing themes and insights within minutes of our entering interview transcripts or data into Einstein. As a bonus, prompt engineering also helps us ask more beautiful questions. However, it’s crucial to note that while it’s a kickstarter and time-saver, it can’t replace the depth and resonance of human-led research, guided by our own brains and gut checks.

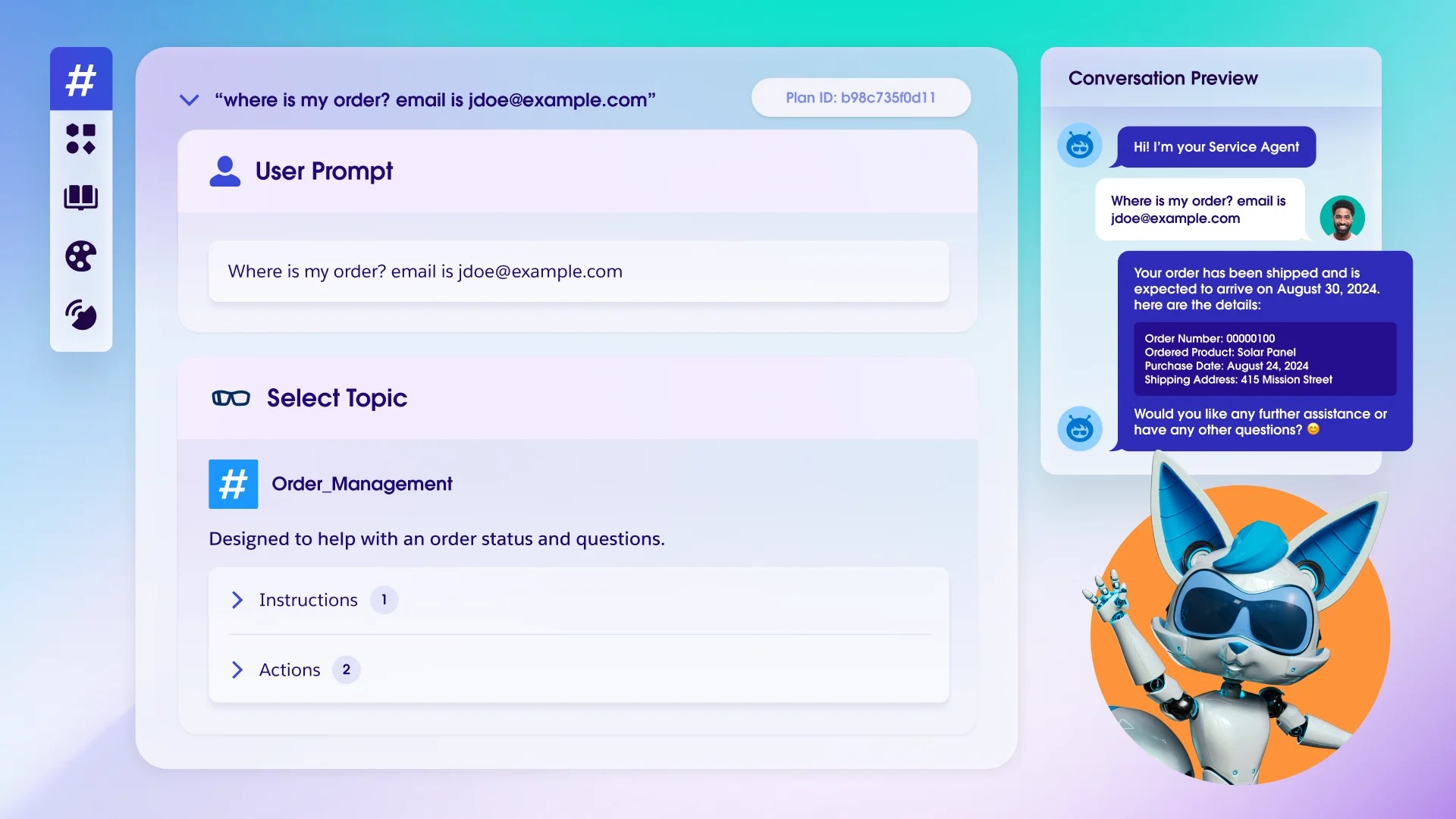

AI assistant as a creative sounding board

When presenting a final vision to our customers, we often have our talented designers whip up some amazing visuals to illustrate what that vision might look like. Sometimes these are hand-drawn sketches, stock photography, or creatively edited images. One of the most exciting possibilities of generative AI is the ability to “imagine” visuals using words and have them come to life.

We were blown away by the range of results, but, as always, there are areas that can trip you up:

Consistency and control: If you’re telling a story with several visuals and/or characters, you need consistency. Stock photography sites sometimes offer a series of photos of the same character that you can reuse, but tools like Midjourney can vary wildly from image to image. DALL·E 3 retains some context, but needs constant reminders not to stray too far. There are workarounds, but they require much deeper immersion in the more advanced features available with Stable Diffusion, such as training a LoRA model on your own photos. Similarly, when you describe an image (e.g. “a young man holding a tablet next to a semi-trailer truck”), the pose that emerges can vary from render to render, and it can take a lot of trial and error (and patience) to get what you want. Going deeper into features such as ControlNet and inpainting can help, but that requires practice and know-how.

Ethics and bias: Because these models are trained on large amounts of data whose sources we usually don’t even know, they can have bias embedded in them. It’s important to be aware of these biases when working with AI. For example, prompting many AI platforms for a “medical doctor” produces images that skew heavily toward white men. As “AI creative directors,” we need to recognize these biases. Similarly, we need to ensure that the images these tools draw on are ethically sourced. Generative AI technology can “copy” an artist’s style without permission, which raises ethical and legal questions.

Results:

- Preferred Tools: Midjourney, Leonardo.ai, DALL·E 3

- Time Saved: None, but we made up for it in style points!

- AI Sidekick Score: 8.5 – While the techniques we used didn’t really save us time, they opened up a whole new world of possibilities, and even helped with inspiration.

Summary: After using generative AI tools to help with the creative process, it’s hard to go back. In some cases, playing around with a tool inspired the final product. The catch is that it doesn’t make you faster. Then again, as designers, we’ll always fill the time we have to complete a task and try to push the limits of our creativity. These capabilities add new tools to our arsenal, and they can even give you new skills. (I’m a terrible sketch artist!)

AI assistant is my new best buddy…in training

These experiments made it easy for us to see how generative AI can boost our productivity and creativity, even if some of the gains were modest. For a technology that is still so young, that shows great promise. Almost any team can incorporate AI as an assistant, not only to work faster but to add skills and even increase creativity, or at least to get inspired.

Sasha Charlemagne and Yoni Sarason co-authored this blog.