Nazneen Rajani

Word embeddings inherit strong gender bias in data which can be further amplified by downstream models. We propose to purify word embeddings against corpus regularities such as word frequency prior to inferring and removing the gender subspace, which significantly improves the debiasing performance.

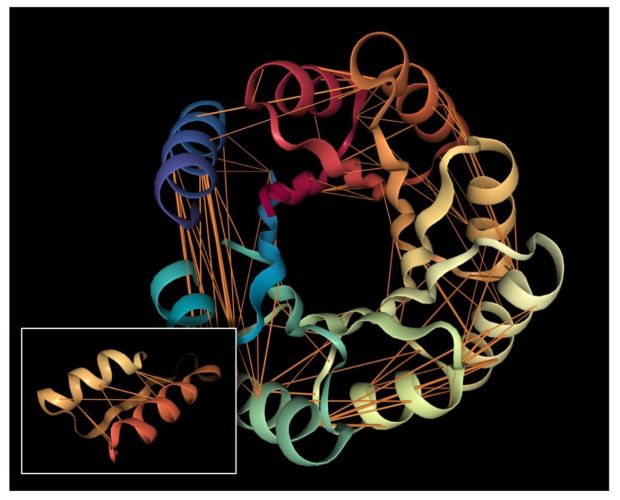

In our study, we show how a language model, trained simply to predict a masked (hidden) amino acid in a protein sequence, recovers high-level structural and functional properties of proteins.

We show that deep neural models can describe common sense physics in a valid and sufficient way that is also generalizable. Our ESPRIT framework is trained on a new dataset with physics simulations and descriptions that we collected and have open-sourced.

Many NLP applications today deploy state-of-the-art deep neural networks that are essentially black-boxes. One of the goals of Explainable AI (XAI) is to have AI models reveal why and how they make their…

Commonsense reasoning that draws upon world knowledge derived from spatial and temporal relations, laws of physics, causes and effects, and social conventions is a feature of human intelligence.