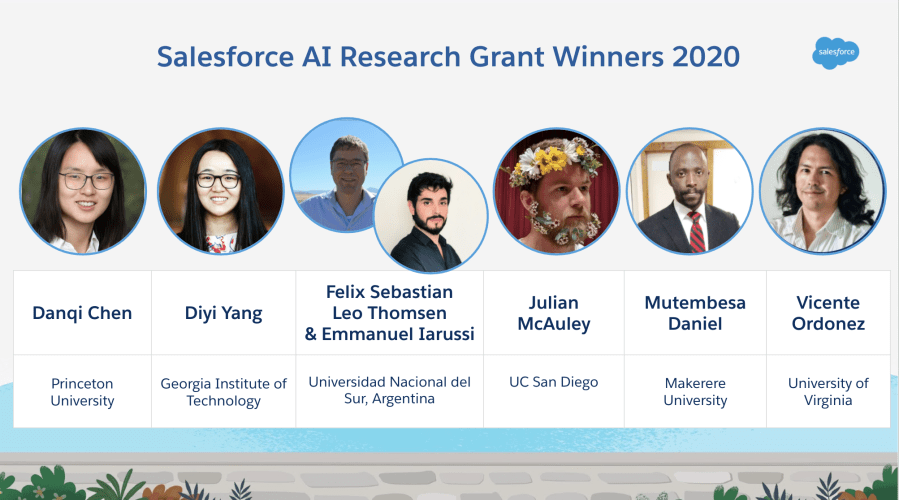

We are proud to announce the 2020 winners of our Salesforce AI Research Grant. Each of our winners will receive a $50K grant to advance their work and help us shape the future of AI. In our third year of offering this grant, we had a record-breaking number of applicants. Our reviewing committee, made up of our top AI researchers, reviewed over 180 quality proposals from 30+ countries. From this competitive round of submissions, we decided to increase funding and ultimately awarded six research grants based on the quality of the proposals, the novelty of the ideas, and their relevance to the research topics we proposed.

Meet Our 2020 Grant Winners

Danqi Chen, Princeton University

Learning from Human Feedback on Machine-Generated Rationales for Natural Language Processing Tasks

We propose to develop a human-in-the-loop framework that incorporates human feedback on machine-generated rationales. Given an input, a model will generate an intermediate rationale for humans to provide feedback on. The feedback will be used to modify, transform, or supplement this intermediate representation so that it becomes fully human-interpretable. The model iterates between training and generating new outputs to collect feedback. This approach can afford great efficiency in the model's performance, as well as provide a new level of interpretability.

Diyi Yang, Georgia Institute of Technology

Linguistically Informed Learning with Limited Data for Structured Prediction

In the era of deep learning, natural language processing (NLP) has achieved extremely good performance in most data-intensive settings. However, when there are only one or a few training examples, supervised deep learning models often fail. This strong dependence on labeled data largely prevents neural network models from being applied to new settings or real-world situations. To alleviate the dependence of supervised models on labeled data, different kinds of data augmentation approaches have been designed. Despite being widely used in many NLP tasks such as text classification, these techniques generally struggle to create linguistically meaningful, diverse, and high-quality augmented samples for structured prediction tasks, where a single sentence might have various interrelated structures and labels. To train better structured prediction models with limited data in few-shot learning, and to alleviate their dependence on labeled structured data, we propose a novel data augmentation method, based on local additivity, that creates an unlimited number of training samples while constraining the augmented samples to be close to each other. To further enhance learning with limited data, we will apply this local-additivity augmentation to semi-supervised few-shot learning by designing linguistically informed self-supervised losses between unlabeled data and its augmentations. We demonstrate the effectiveness of these models in two classical structured prediction tasks: named entity recognition (NER) and semantic parsing.
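As a rough illustration of interpolation-style augmentation, the sketch below mixes each example with its nearest neighbor, in the spirit of mixup. It is not the authors' method: it assumes that "local additivity" can be approximated by linear interpolation between nearby examples, it works on fixed-length feature vectors with one-hot labels rather than token-level structures, and every name in it (`augment`, `nearest_neighbor`, `min_lam`) is hypothetical.

```python
import random
from typing import List, Sequence, Tuple

Vector = List[float]


def squared_distance(a: Sequence[float], b: Sequence[float]) -> float:
    """Squared Euclidean distance between two equal-length vectors."""
    return sum((x - y) ** 2 for x, y in zip(a, b))


def nearest_neighbor(index: int, features: Sequence[Vector]) -> int:
    """Index of the example closest to features[index], excluding itself."""
    candidates = [j for j in range(len(features)) if j != index]
    return min(candidates, key=lambda j: squared_distance(features[index], features[j]))


def interpolate(a: Sequence[float], b: Sequence[float], lam: float) -> Vector:
    """Convex combination lam * a + (1 - lam) * b."""
    return [lam * x + (1.0 - lam) * y for x, y in zip(a, b)]


def augment(
    features: Sequence[Vector],
    labels: Sequence[Vector],
    num_samples: int,
    seed: int = 0,
    min_lam: float = 0.5,
) -> List[Tuple[Vector, Vector]]:
    """Create new (feature, soft-label) pairs by mixing each sampled
    example with its nearest neighbor.

    Assumption: keeping lam >= min_lam and mixing only with the nearest
    neighbor keeps augmented samples close to real ones.
    """
    if len(features) < 2:
        raise ValueError("need at least two examples to interpolate")
    rng = random.Random(seed)
    samples = []
    for _ in range(num_samples):
        i = rng.randrange(len(features))
        j = nearest_neighbor(i, features)
        lam = rng.uniform(min_lam, 1.0)
        mixed_x = interpolate(features[i], features[j], lam)
        mixed_y = interpolate(labels[i], labels[j], lam)  # soft label
        samples.append((mixed_x, mixed_y))
    return samples
```

Because `lam` is drawn from a continuous range, the same small labeled set can yield as many distinct augmented samples as needed. Restricting the mix to nearest neighbors is one simple way to keep those samples near the original data.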

Felix Sebastian Leo Thomsen & Emmanuel Iarussi, Universidad Nacional del Sur, Bahía Blanca, Argentina

Bone-GAN: Towards an accurate diagnosis of osteoporosis from routine body CTs

Osteoporosis is commonly diagnosed from the deviation in bone mineral density relative to age and sex. Recent methods based on convolutional neural networks (CNNs) have shown promising results in extracting additional geometrical information in vivo, potentially allowing bone deterioration to be measured with much higher accuracy. However, the lack of high-quality data means these methods are not yet clinically applicable. Hence, for any serious attempt to improve the diagnosis of osteoporosis, the training data must be extended with reliable artificial 3D bone. Generative Adversarial Networks (GANs) and their extensions have opened up many exciting ways to tackle well-known and challenging medical image analysis problems. Based on GANs, we recently presented the first method that allows us to synthesize bone samples with disentangled structural parameters, making it possible to predict the outcome of osteoporosis therapies. This pioneering method has great potential to reach clinical quality requirements, to provide additional clinical patient information, and to support medical decisions. Our proposal describes the following workflow: A CNN that estimates bone geometry is applied as a routine image processing task in a clinical environment, updating the databases of the hospital information systems daily. The required CT images are automatically segmented before being passed to the CNN. However, training the CNN requires registered pairs of training images at very high preclinical and low clinical resolution, which generally come from ex vivo studies of very limited availability. Thus, the key ingredient in our method is a bone GAN model that synthesizes the necessary amount of artificial high-resolution training data. Artificial pairs of bones are then obtained by imputing in vivo CT noise. The final objective is the integration of the implemented models at a partner research hospital.

Julian McAuley, UC San Diego

Improving Recommender Systems via Conversation and Natural Language Explanation

We propose research at the intersection of two current topics, recommender systems and explainable ML, that seeks to overcome limitations in both. First, there is a growing interest in conversational recommendation, i.e., where a user receives recommendations via sequential interactions with a conversational agent. Second, there is a growing interest in making ML systems more interpretable. We argue that both topics currently fall short of their high-level ambitions. Explainable AI struggles to come up with notions of interpretability that are useful to end users; meanwhile, conversational recommendation struggles to yield datasets that meaningfully map to the goal of more empathetic recommendation or allow for straightforward offline training. To address both of these issues, we seek a form of conversational recommendation where users iteratively receive recommendations along with natural language explanations of what was recommended; users can then give feedback (either through natural language or via a pre-defined ontology of feedback types) that will gradually help them ‘hone in’ on more useful and empathetic recommendations. We believe that recommender systems provide an ideal testbed to expand research on conversation, explanation, and interpretability, partly due to the wealth of explanatory text that can be used to build datasets (i.e., reviews), as well as the inherently sequential nature of many recommendation problems.

Mutembesa Daniel, Artificial Intelligence Research Lab, Makerere University

The F.A.T.E of AI in African agriculture. A case study of AI algorithms used to assess creditworthiness for digital agro-credit to smallholder farmers in Uganda.

Fairness, Accountability, Transparency and Ethics (F.A.T.E) has become a central theme in Artificial Intelligence (AI) and digital innovation due to the uneven distribution of negative impacts on underserved and underrepresented communities. Nowhere is it more critical to understand the social impacts of AI and deploy equitable automated decision systems than in smallholder agriculture, which is the lifeline for 500 million households in Sub-Saharan Africa. I propose to study the state-of-F.A.T.E for AI-based innovations in agriculture, specifically decision algorithms for digital agro-credit to smallholder farmers. A key output of my investigation will be a fairer framework for developing equitable algorithms to determine farmer creditworthiness, as a step towards achieving last-mile financial inclusion and food resilience for poor, underserved farmer groups.

Vicente Ordonez, University of Virginia

Addressing Biases on Generic Pretrained Models

Advances in machine learning (e.g., deep neural networks) have revolutionized many disciplines. Large-scale computing and data availability have made it possible to train models that can serve as generic feature extractors for both images and text. For instance, it is common to re-use the ResNet-50 architecture pretrained on a subset of the ImageNet dataset for a variety of downstream tasks such as detection, segmentation, captioning, and navigation, among many others. In the natural language processing domain, models such as BERT have served a similar purpose as generic feature extractors for tasks such as entailment, classification, sentiment analysis, and reading comprehension. As such, these generic feature extractors have become latent variables for all these systems. However, it is not yet clear:

What are the useful features that these systems encode? What are the risks of re-using these features without a clear understanding of their significance? Our project aims to uncover and mitigate biases in these generic feature extractors for both images and text.