How to Build Ethics into AI - Part II

Research-based recommendations to keep humanity in AI


Published: April 2, 2018

This is part two of a two-part series about how to build ethics into AI. Part I focused on cultivating an ethical culture in your company and team, as well as being transparent within your company and externally. In this article, I will focus on mechanisms for removing exclusion from your data and algorithms. Of all the interventions or actions we can take, the advancements here have the highest rate of change. New approaches to identifying and addressing bias in data and algorithms continue to emerge, which means that customers must stay abreast of this emerging technology.

Remove Exclusion

There are many ways for bias to enter an AI system. In order to fix them, it is necessary to recognize them in the first place.

Understand the Factors Involved

Identify the factors that are salient and mutable in your algorithm(s).

Digital lending apps take in large amounts of alternative data from a person’s mobile device, such as daily location patterns, social media activity, the punctuation of text messages, or how many of their contacts have last names, in order to approve or reject loans or to charge a higher interest rate. For example, these models may treat smokers and late-night internet users as bad at repaying loans. This data is typically collected without the user’s awareness because permission is buried in the terms of service (TOS).

Both engineers and end users are uncomfortable with a “black box.” They want to understand the inputs that went into making the recommendations. However, it can be almost impossible to explain exactly how an AI came up with a recommendation. In the lending example above, it’s important to remember that correlation does not equal causation and to think critically about the connections being drawn when significant decisions are being made, e.g., home loan approval.

What are the factors that, when manipulated, materially alter the outcome of the AI’s recommendation? By understanding the factors being used and turning them on/off, creators and users can see how each factor influences the AI and which result in biased decisions.
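One way to try the "turn factors on/off" idea is a simple ablation test: replace one factor at a time with a neutral value and count how often the decision flips. The sketch below is a minimal illustration under stated assumptions. The `toy_model`, its weights, the factor names, and the applicant rows are all hypothetical and are not taken from any real lending system.

```python
from typing import Callable, Dict, List

Applicant = Dict[str, float]


def factor_influence(
    model: Callable[[Applicant], bool],
    rows: List[Applicant],
    neutral_values: Dict[str, float],
) -> Dict[str, float]:
    """Return, per factor, the fraction of rows whose decision flips
    when that factor alone is replaced by its neutral value."""
    original = [model(row) for row in rows]
    influence = {}
    for factor, neutral in neutral_values.items():
        flips = 0
        for row, decision in zip(rows, original):
            ablated = dict(row)          # copy so the input is not mutated
            ablated[factor] = neutral    # "turn off" this one factor
            if model(ablated) != decision:
                flips += 1
        influence[factor] = flips / len(rows)
    return influence


def toy_model(applicant: Applicant) -> bool:
    """Hypothetical stand-in for a real model: approve if the score is high enough."""
    score = (
        0.5 * applicant["income"]
        - 2.0 * applicant["smoker"]
        - 0.1 * applicant["late_night_usage"]
    )
    return score >= 1.0


# Made-up applicants for illustration only.
applicants = [
    {"income": 4.0, "smoker": 1.0, "late_night_usage": 1.0},
    {"income": 3.0, "smoker": 0.0, "late_night_usage": 1.0},
    {"income": 5.0, "smoker": 1.0, "late_night_usage": 0.0},
    {"income": 1.0, "smoker": 0.0, "late_night_usage": 0.0},
]

influence = factor_influence(
    toy_model, applicants, {"smoker": 0.0, "late_night_usage": 0.0}
)
print(influence)
```

In this toy setup, switching off the smoker flag flips half of the decisions while late-night usage flips none, which is the kind of signal that should prompt a closer look at why a factor carries so much weight.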

This guide by Christoph Molnar for making black box models explainable is one attempt at digging deeper. Another method was demonstrated by Google researchers in 2015. They reverse engineered a deep-learning-based image recognition algorithm so that instead of spotting objects in photos, it would generate or modify them in order to discover the features the program uses to recognize a barbell or other object.

Microsoft’s Inclusive Design Team has added to their design tools and processes a set of guidelines to recognize exclusion in AI. The remaining recommendations in this section are inspired by their Medium post on these five types of bias.

Prevent Dataset Bias

Identify who or what is being excluded or overrepresented in your dataset, why they are excluded, and how to mitigate it.

Do a Google search on “3 white teens” and then on “3 black teens” and you will see mostly stock photos for the white teens and mostly mug shots for the black teens. This is the result of a lack of stock photos of black teenagers in the dataset, but it’s easy to see how an AI system trained only on this dataset would draw biased conclusions about the likelihood of a black vs. white teen being arrested.

Dataset bias results in over- or underrepresentation of a group. For example, your dataset may be heavily weighted towards your most advanced users, underrepresenting the rest of your user population. The result could be a product or service that your power users love but that never gives the rest of your users the opportunity to grow and thrive. So what does this look like and how do you fix it?

- What: The majority of your dataset is represented by one group of users.

How: Use methods like cost-sensitive learning, changes in sampling methods, and anomaly detection to deal with imbalanced classes in machine learning.

- What: Statistical patterns that apply to the majority may be invalid within a minority group.

How: Create different algorithms for different groups rather than one-size-fits-all.

- What: Categories or labels oversimplify the data points and may be wrong for some percentage of the data.

How: Evaluate using path-specific counterfactual fairness. This is a form of decision making for machines where a judgement about someone is identified as fair if the machine would have made the same judgement had that person been in a different demographic group along unfair pathways (e.g., if a woman were a man, or a white man were black), as explained by Parmy Olson.

- What: Identify who is being excluded and the impact on your users as well as your bottom line. Context and culture matter, but it may be impossible to “see” them in the data.

How: Identify the unknown unknowns, as suggested by researchers at Stanford and Microsoft Research.
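Two of the methods named in the list above, cost-sensitive learning and changes in sampling, can be sketched in a few lines. This is a minimal illustration, not a recommended pipeline: the helper names and the "power"/"casual" labels are hypothetical, the weights use the common inverse-frequency heuristic (total samples divided by number of classes times class count), and the resampling is plain random oversampling with a fixed seed.

```python
import random
from collections import Counter
from typing import Dict, Hashable, List, Sequence, Tuple


def balanced_class_weights(labels: Sequence[Hashable]) -> Dict[Hashable, float]:
    """Inverse-frequency weights: rarer classes get proportionally larger weights."""
    counts = Counter(labels)
    n_samples, n_classes = len(labels), len(counts)
    return {label: n_samples / (n_classes * count) for label, count in counts.items()}


def oversample_minority(
    rows: Sequence, labels: Sequence[Hashable], seed: int = 0
) -> Tuple[List, List]:
    """Randomly duplicate rows of smaller classes until every class
    has as many rows as the largest one."""
    rng = random.Random(seed)
    counts = Counter(labels)
    target = max(counts.values())
    out_rows, out_labels = list(rows), list(labels)
    for label, count in counts.items():
        pool = [row for row, lab in zip(rows, labels) if lab == label]
        for _ in range(target - count):
            out_rows.append(rng.choice(pool))
            out_labels.append(label)
    return out_rows, out_labels


# Made-up dataset: 8 power users and 2 casual users.
labels = ["power"] * 8 + ["casual"] * 2
rows = list(range(len(labels)))  # placeholder feature rows

weights = balanced_class_weights(labels)
balanced_rows, balanced_labels = oversample_minority(rows, labels)
print(weights)
print(Counter(balanced_labels))
```

Weights like these would typically be passed to a training routine that supports per-class or per-sample weighting. Oversampling is the blunter alternative for when it does not.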

Prevent Association Bias

Determine if your training data or labels represent stereotypes (e.g., gender, ethnic) and edit them to avoid magnifying them.

In a photo dataset used to train image recognition AI systems, researchers found the dataset had more women than men in photos connected with cooking, shopping, and washing while photos containing driving, shooting, and coaching had more men than women.

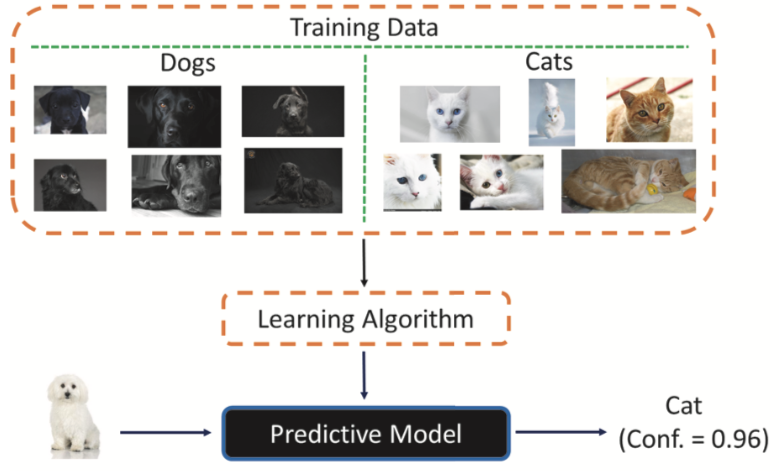

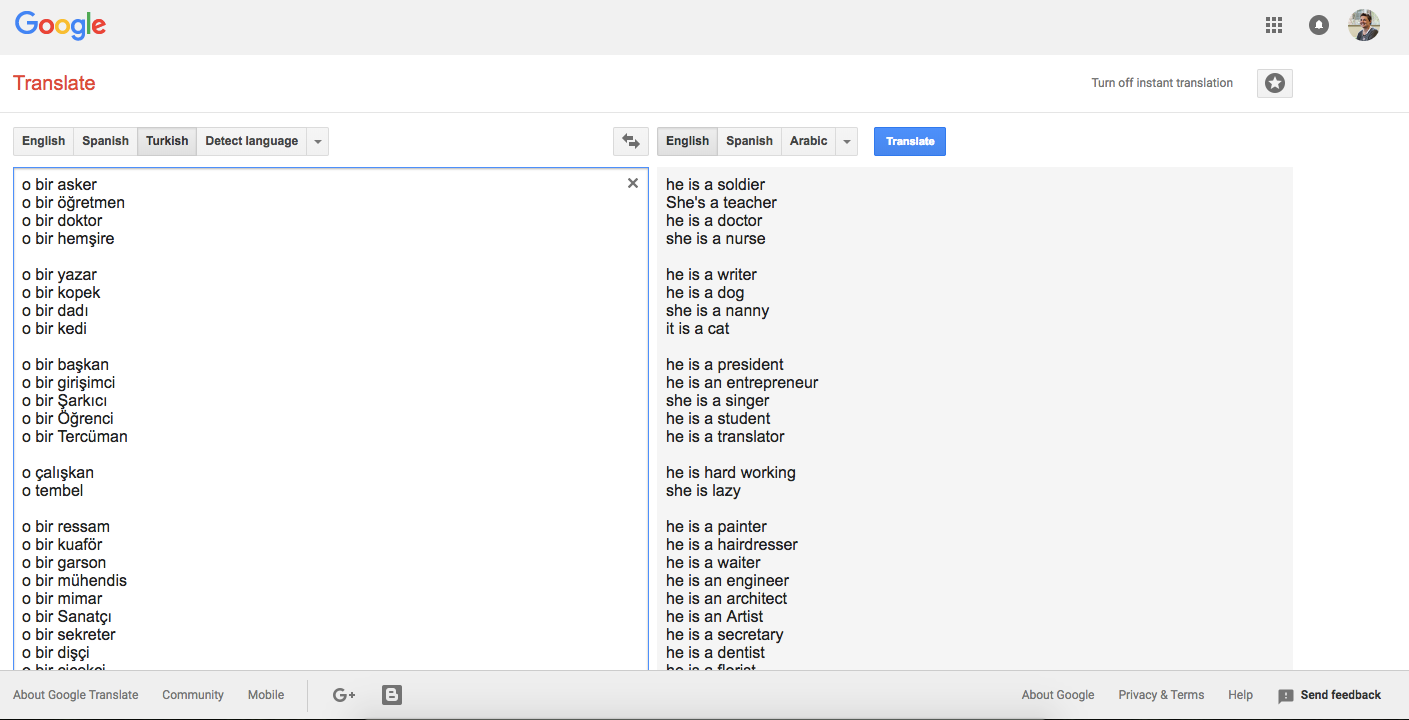

Association bias occurs when the data used to train a model perpetuates and magnifies a stereotype, and it isn’t limited to images. For example, when translating from gender-neutral languages such as Turkish, Google Translate shows gender bias by pairing “he” with words like “hardworking,” “doctor,” and “president” and “she” with words like “lazy,” “nurse,” and “nanny.” Similar biases have also been found in Google News search.

Unfortunately, machine learning apps using these biased datasets will amplify those biases. In the photo example, the dataset had 33% more women than men in photos involving cooking, but the algorithm amplified that bias to 68%! This is the result of using discriminative models (as opposed to generative models), which increase the algorithm’s accuracy by amplifying generalizations (bias) in the data. Laura Douglas explains this process beautifully if you want to learn more.
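One simple way to check for this kind of amplification is to compare the share of women among cooking images in the training labels with the share among the model’s predictions. The sketch below assumes made-up counts that are not the figures from the study, and `gender_share` is a hypothetical helper rather than the researchers’ exact metric.

```python
from typing import Dict


def gender_share(counts: Dict[str, int], gender: str = "woman") -> float:
    """Fraction of images for one activity that are labeled with the given gender."""
    return counts[gender] / sum(counts.values())


# Hypothetical counts of "cooking" images, by labeled or predicted gender.
train_counts = {"woman": 660, "man": 340}       # labels in the training set
predicted_counts = {"woman": 840, "man": 160}   # model output on new images

train_share = gender_share(train_counts)
predicted_share = gender_share(predicted_counts)

# A positive value means the model leans further toward "woman" than its training data did.
amplification = predicted_share - train_share
print(f"training share:  {train_share:.2f}")
print(f"predicted share: {predicted_share:.2f}")
print(f"amplification:   {amplification:+.2f}")
```

Tracking this difference per activity, rather than a single overall accuracy number, shows where a model exaggerates the skew already present in its data.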

Because of this bias amplification, simply leaving a dataset as-is because it represents “reality” (e.g., 91% of nurses in the US are female) is not the right approach: the AI distorts the already imbalanced perspective. This makes it more difficult for people to realize, for example, that there are many male nurses in the workforce today and that they tend to earn higher salaries than women.

Researchers have found ways to correct for unfair bias while maintaining accuracy by reducing gender bias amplification using corpus-level constraints and debiasing word embeddings. If your AI system learns over time, it’s necessary to regularly check the results of your system to ensure that bias has not once again crept into your dataset. Addressing bias is not a one-time fix; it requires constant vigilance.
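To give a feel for what debiasing word embeddings can involve, here is a simplified sketch of one idea from that line of work: estimate a "gender direction" from a pair of gendered words and remove that component from words that should be gender-neutral. The three-dimensional vectors are invented for illustration, `neutralize` is a hypothetical helper, and real methods use many word pairs and additional steps.

```python
import math
from typing import List

Vector = List[float]


def dot(u: Vector, v: Vector) -> float:
    return sum(a * b for a, b in zip(u, v))


def neutralize(vector: Vector, direction: Vector) -> Vector:
    """Remove the component of `vector` that lies along `direction`."""
    norm = math.sqrt(dot(direction, direction))
    unit = [d / norm for d in direction]
    projection = dot(vector, unit)
    return [x - projection * u for x, u in zip(vector, unit)]


# Toy embeddings (made up); real embeddings have hundreds of dimensions.
he = [1.0, 0.0, 0.2]
she = [-1.0, 0.0, 0.2]
nurse = [-0.6, 0.5, 0.3]  # leans toward "she" along the first axis

# Assumption: a single word pair is enough to estimate the gender direction.
gender_direction = [a - b for a, b in zip(he, she)]

nurse_debiased = neutralize(nurse, gender_direction)
print(nurse_debiased)
```

After the projection, the toy "nurse" vector has no component along the gender direction while its other coordinates are unchanged, which is the property a check like this would need to re-verify whenever the embeddings are retrained.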

Prevent Confirmation Bias

Determine if bias in the system is creating a self-fulfilling prophecy and preventing freedom of choice.

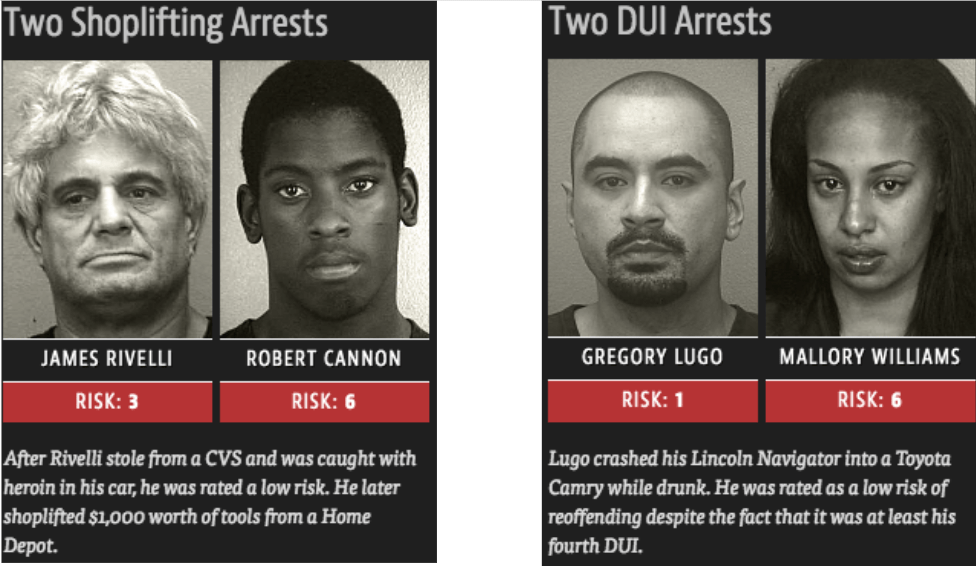

The Compas AI system used by some court systems to predict a convicted criminal’s risk of reoffending has shown systemic bias against people of color, resulting in denial of parole or longer prison sentences.

Confirmation bias reinforces preconceptions about a group or individual. This results in an echo chamber that presents information or options similar to what has previously been chosen by or for an individual. In the example above, an article by ProPublica demonstrated that the algorithm used by Compas AI was more likely to incorrectly categorize black defendants as having a high risk of reoffending and more likely to incorrectly categorize white defendants as low risk. Another study showed that untrained Amazon Mechanical Turk workers using only six factors to predict recidivism were just as accurate as Compas using 157 factors (67% vs. 65% accuracy, respectively).

Even when race wasn’t one of the factors used, both were more likely to inaccurately predict that black defendants would reoffend and white defendants would not. That is because certain data points (e.g., time in jail) are a proxy for race, creating runaway feedback loops that disproportionately impact those who are already socially disadvantaged.

The Compas system is just one example, but a segment of the population faces similar bias from many of the systems discussed here, including predictive policing, lending apps, ride hailing services, and AI assistants. One can only imagine how overwhelming it would be to face bias and exclusion on multiple fronts each day. As with other types of bias, you must test your results to see the bias happening, identify the biasing factors, and then remove them in order to break these runaway feedback loops.
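Testing results for this kind of bias can start with comparing error rates across groups: how often each group is wrongly flagged as high risk (false positives) and wrongly flagged as low risk (false negatives). The sketch below is a minimal example of that comparison. The groups "A" and "B", the record layout, and the counts are invented for illustration and are not the ProPublica data.

```python
from collections import defaultdict
from typing import Dict, Iterable, Optional, Tuple

# (group, predicted_high_risk, actually_reoffended)
Record = Tuple[str, bool, bool]


def error_rates_by_group(records: Iterable[Record]) -> Dict[str, Dict[str, Optional[float]]]:
    """Compute false positive and false negative rates separately for each group."""
    tallies = defaultdict(lambda: {"tp": 0, "fp": 0, "tn": 0, "fn": 0})
    for group, predicted, actual in records:
        if predicted and actual:
            tallies[group]["tp"] += 1
        elif predicted and not actual:
            tallies[group]["fp"] += 1
        elif not predicted and actual:
            tallies[group]["fn"] += 1
        else:
            tallies[group]["tn"] += 1

    rates = {}
    for group, t in tallies.items():
        negatives = t["fp"] + t["tn"]   # people who did not reoffend
        positives = t["tp"] + t["fn"]   # people who did reoffend
        rates[group] = {
            "false_positive_rate": t["fp"] / negatives if negatives else None,
            "false_negative_rate": t["fn"] / positives if positives else None,
        }
    return rates


# Made-up outcomes for two hypothetical groups.
records = (
    [("A", True, False)] * 2 + [("A", False, False)] * 2
    + [("A", True, True)] * 3 + [("A", False, True)] * 1
    + [("B", True, False)] * 1 + [("B", False, False)] * 3
    + [("B", True, True)] * 2 + [("B", False, True)] * 2
)

rates = error_rates_by_group(records)
for group, group_rates in sorted(rates.items()):
    print(group, group_rates)
```

In this toy data the overall accuracy is the same for both groups, yet group A is wrongly flagged as high risk twice as often as group B, which is why per-group error rates are worth checking alongside accuracy.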

Prevent Automation Bias

Identify when your values overwrite the user’s values and provide ways for users to undo it.

An AI beauty contest was expected to be unbiased in its assessments of beauty but nearly all winners were white.

Automation bias forces the majority’s values on the minority, which harms diversity and freedom of choice. The values of the AI system’s creators are then perpetuated. In the above example, an AI beauty contest labeled primarily white faces as most beautiful based on training data. European standards of beauty plague the quality of photos of non-Europeans today, resulting in photos of dark-skinned people coming out underexposed and AI systems having difficulty recognizing them. This in turn results in insulting labels (e.g., Google Photos “gorilla incident”) and notifications (e.g., “smart” cameras asking Asians if they blinked). Worse, police facial recognition systems disproportionately affect African Americans.

To begin addressing this bias, one must start by examining the results for values-based bias (e.g., training data lacks diversity to represent all users or broader population, subjective labels represent the creator’s values).

In the earlier example of lending apps making decisions based on whether or not someone is a smoker, the question must be asked whether this represents the values of the creators or of the majority of the population (e.g., smoking is bad, therefore smokers are bad). Return to the social-systems analysis to get feedback from your users and identify whether their values or cultural considerations are being overwritten. Would your users make the same assessments or recommendations as the AI? If not, modify the training data, labels, and algorithms to represent the diversity of values.

Mitigate Interaction Bias

Understand how your system learns from real-time interactions and put checks in place to mitigate malicious intent.

Inspirobot uses AI and content it scrapes from the web to generate “inspirational” quotes but the results range from amusingly odd to cruel and nihilistic.

Interaction bias happens when humans interact with or intentionally try to influence AI systems and create biased results. Inspirobot’s creator reports that the bot’s quotes are a reflection of what it finds on the web and his attempts to moderate the bot’s nihilistic tendencies have only made them worse.

You may not be able to avoid people trying to intentionally harm your AI system but you should always conduct a “pre-mortem” to identify ways in which your AI system could be abused and cause harm. Once you have identified potential for abuse, you should put checks in place to prevent it where possible and fix it when you can’t. Regularly review the data your system is using to learn and weed out biased data points.

Where to Go from Here

Bias in AI is a reflection of bias in our broader society. Building ethics into AI is fixing the symptoms of a much larger problem. We must decide as a society that we value equality and equity for all and then make it happen in real life, not just in our AI systems. AI has the potential to be the Great Democratizer or to magnify social injustice, and it is up to you to decide which side of that spectrum you want your product(s) to be on.

Thank you Justin Tauber, Liz Balsam, Molly Mahar, Ayori Selassie, and Raymon Sutedjo-The for all of your feedback!