Earlier this year, Danielle Cass and I ran a workshop with 23 ethical AI practitioners from 15 organizations and shared insights into what has been working for them, along with the open questions and challenges they are working through. A few months later, Matt Marshall, Founder of VentureBeat, invited us to conduct a similar workshop at VentureBeat Transform with the goal of expanding the representation of practitioners and moving the conversation to the next level.

On July 10th, 36 practitioners from 29 organizations came together for a two-hour, high-speed workshop to tackle four challenges we are working on as a discipline:

- How to operationalize ethics

- How to scale across our organizations

- How to measure impact and success

- How ethics practitioners can support, share and expand each other’s work amid skepticism

Operationalizing Ethics

Three primary categories arose in the breakout groups: 1) Socialization/education, 2) Processes to kick off every project, and 3) Tools or processes used throughout product development.

Socialization/Education

One of the first tasks for people in ethics or responsible-AI roles is educating everyone in the organization and socializing ethical considerations, including how to work responsibly. Whether through presentations or formal training (e.g., inclusion in machine learning classes), the key is making the material relevant and specific to the organization.

Psychological safety was identified as a necessary precursor for organizations to have these difficult conversations productively. Ethical discussions can provoke visceral responses when individuals feel their values are being attacked. Modeling constructive debate and providing a framework for prioritizing risks and responsibilities (see the taxonomy discussion further down) can help de-escalate tensions and enable productive dialog.

Participants also stressed the need to publish insights in easy-to-access locations where coworkers can use them. Sales reps who want to tell customers how their organization creates responsible AI, for example, need ready access to relevant slides and talking points. This reduces the risk of internally facing documents containing sensitive information being shared externally. Similarly, publishing insights for customers and others working in this field raises everyone’s awareness and helps move the conversation forward.

Processes to Kick Off Every Project

Participants shared a number of ways they explore the problem space at the beginning of a project, including Consequence-Scanning Workshops, Scenario Planning, and the many toolkits or frameworks available today.

It’s imperative to begin by being clear on the goals to be accomplished, then identify the spectrum of intended and unintended consequences. Use an agreed-upon taxonomy to prioritize all of the risks identified. It’s unlikely that every possible risk can be mitigated, so it’s important to have a mechanism to objectively prioritize those that must be addressed.
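The workshop did not prescribe a particular taxonomy, so what follows is only a minimal Python sketch of one way to make that prioritization repeatable. It assumes hypothetical five-point severity and likelihood scales, scores each risk as severity × likelihood (a common risk-matrix convention, not something participants specified), and treats anything at or above an illustrative threshold of 12 as must-address. The names `Risk` and `prioritize`, and the example risks, are all hypothetical.

```python
from dataclasses import dataclass

# Hypothetical ordinal scales standing in for a team's agreed-upon taxonomy.
SEVERITY = {"minor": 1, "moderate": 2, "serious": 3, "severe": 4, "critical": 5}
LIKELIHOOD = {"rare": 1, "unlikely": 2, "possible": 3, "likely": 4, "almost_certain": 5}


@dataclass(frozen=True)
class Risk:
    description: str
    severity: str
    likelihood: str

    @property
    def score(self) -> int:
        # Severity x likelihood: an assumed risk-matrix convention.
        return SEVERITY[self.severity] * LIKELIHOOD[self.likelihood]


def prioritize(risks, must_address_at=12):
    """Rank risks by score (highest first) and split them at an illustrative threshold."""
    ranked = sorted(risks, key=lambda r: r.score, reverse=True)
    must_address = [r for r in ranked if r.score >= must_address_at]
    deferred = [r for r in ranked if r.score < must_address_at]
    return must_address, deferred


# Hypothetical risks surfaced in a consequence-scanning session.
risks = [
    Risk("Users misread confidence scores", "moderate", "possible"),
    Risk("Model underperforms for non-native speakers", "serious", "likely"),
    Risk("Training data allows re-identification", "critical", "possible"),
]
must_address, deferred = prioritize(risks)
for r in must_address:
    print("MUST ADDRESS:", r.score, r.description)
```

A purely multiplicative score can understate rare but catastrophic risks, so a team using something like this might also add a rule that any "critical" severity goes to review regardless of its score.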

One of the companies represented described their process as follows:

1. Create a Global Data Map

Map all of the data held or used by various stakeholders in the company, and organize it by sensitivity and data source. Next, identify all the possible activities for which teams may use the data. Finally, identify any legal (e.g., GDPR), ethical and best-practice considerations for each of those activities. It’s important to keep the matrix dynamic to accommodate new data and new uses.

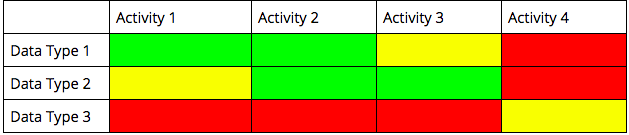

2. Create a Risk Profile for Global Legal and Ethical Data Use

The global data map is a table with rows and columns for the different categories of data and the identified activities for data use. Next, color-code each cell red, yellow or green to indicate the degree of constraint for that scenario (see Figure 1).

Red = Data may not be used for that purpose

Yellow = Risk/benefit analysis and documentation required prior to approval

Green = Data may safely be used for that purpose

Teams wishing to use data for purposes highlighted in yellow must complete a risk/benefit analysis and a review with the data ethics team. Questions asked in the review include:

- Why are the data relevant to the purpose of your project?

- How many people on the team will have access to the data if it is shared with you?

- How are you going to avoid disparate impact on protected classes?

3. Conduct a Follow-Up Review

If the team is approved to use the data, a follow-up review is required. Some of the questions in the follow-up include:

- Did your assumptions match the outcomes?

- When you analyzed the data for disparate impact, what were the findings?

- If you found disparate impact, how did you adjust your project to address it?
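To make the steps above concrete, here is a minimal Python sketch of the global data map as a lookup from (data category, activity) pairs to the red/yellow/green constraints, plus a function that routes a team's request. The categories, activities, and the `review_request` helper are hypothetical, and defaulting unmapped combinations to yellow is an assumption, chosen so that new data and new uses trigger a review instead of passing silently.

```python
from enum import Enum


class Constraint(Enum):
    RED = "Data may not be used for that purpose"
    YELLOW = "Risk/benefit analysis and documentation required prior to approval"
    GREEN = "Data may safely be used for that purpose"


# Hypothetical cells of the global data map: (data category, activity) -> constraint.
DATA_MAP = {
    ("precise_location", "ad_targeting"): Constraint.RED,
    ("precise_location", "fraud_detection"): Constraint.YELLOW,
    ("purchase_history", "product_recommendations"): Constraint.GREEN,
    ("purchase_history", "credit_decisions"): Constraint.YELLOW,
}


def review_request(category, activity, review_approved=False):
    """Return (allowed, next_step) for a team's proposed use of data."""
    # Assumption: unmapped combinations default to YELLOW so that new data or
    # new uses are sent for review instead of being silently allowed.
    constraint = DATA_MAP.get((category, activity), Constraint.YELLOW)
    if constraint is Constraint.RED:
        return False, "denied"
    if constraint is Constraint.GREEN:
        return True, "proceed"
    if review_approved:
        # Approval by the data ethics team still requires a follow-up review.
        return True, "proceed; schedule follow-up review"
    return False, "submit risk/benefit analysis to data ethics team"


print(review_request("precise_location", "fraud_detection"))
```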

Tools & Processes to Use Throughout the Product Development Lifecycle

Review boards and discussion forums with experts were identified as valuable resources in the previous workshop. Participants in this workshop stressed the importance of providing context-specific, tangible recommendations. Checklists, which serve as references, lists of questions to ask, and reminders, help ensure everyone walks away with clear next steps and a better chance of success.

Documentation throughout the lifecycle is crucial, providing transparency, accountability, and consistency. It includes term definitions, governance mechanisms (e.g., model cards, datasheets), feedback from reviews, and decision outcomes. Approachable, simple language avoids miscommunication and encourages compliance.

Scaling across the organization

Key themes that emerged in this breakout group included:

- the importance of co-locating ethics practices within existing infrastructure, processes and frameworks;

- incorporating ethics into incentives and OKRs;

- instilling ethics-by-design knowledge across a workforce.

Ethics Infrastructure/Processes

As described in the insights we shared from our February workshop, embedding ethics into existing infrastructure (like product review processes) was a best practice echoed by the VentureBeat workshop participants. Whether you are leveraging an existing process to highlight ethics or creating new processes in software development, the product development lifecycle or product evaluation, it’s critical to avoid overburdening the business. Processes must be lightweight, adaptable and easy to use.

Relationships between engineering and product must be navigated delicately as ethics efforts scale across a company. Product managers and engineering managers are often not aligned, and because many things can change between ideation and final production, introducing new elements can make that alignment harder.

Incentives

Several companies are starting to explore incentive structures that build ethics into employees’ roles. Another approach is building ethics into OKRs by making it one of the pillars of performance reviews.

This breakout discussion also examined how to build penalties around unethical behavior, specifically related to data use. Participants agreed scaling ethics efforts beyond ethics roles will require technical solutions, including open-source tools.

Ethics-By-Design

Building a business case for “ethics-by-design” is a compelling path forward for scaling ethics. Just as privacy processes were standardized around privacy law, the key to an “ethics-by-design” effort is to surface the true risks through review boards and certification programs.

Education and training are pivotal but remain the biggest challenge for all tech companies in scaling “ethics-by-design.”

One tech ethics practitioner shared their program: in addition to a required, modular in-person training, the company introduced a monthly video series that addresses serious ethics issues, ranging from data access to corruption, in a fun, engaging way. The series has proven successful, with strong participation across the company.

Measuring impact and success

This breakout group brainstormed measures and metrics to evaluate the ethics impact of their work. Drawing on security frameworks such as Common Vulnerabilities and Exposures (CVE), which catalogs publicly known cybersecurity vulnerabilities, and the severity scores assigned to them, ethical risks could similarly be scored, with different types of exposure treated as additive or even multiplicative. As a result, a system could score well overall while its sub-components reveal that specific populations are more vulnerable and at greatest risk of exposure to harm. Having a taxonomy for rating risks helps prioritize what must be addressed prior to launch.
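The additive-versus-multiplicative idea can be illustrated with a minimal Python sketch. Everything in it is hypothetical: the populations, the exposure types, their scores in [0, 1], the population shares, and the 0.3 flagging threshold. "Multiplicative" is modeled here as compounding, (1 + a)(1 + b) - 1, which is one possible reading of the participants' suggestion rather than an established formula. The example shows how a population-weighted overall score can stay low while one sub-population is flagged.

```python
from math import prod

# Hypothetical exposure scores in [0, 1] for each population and exposure type.
EXPOSURES = {
    "general_population": {"privacy": 0.05, "misclassification": 0.05},
    "non_native_speakers": {"privacy": 0.10, "misclassification": 0.40},
}
# Hypothetical share of the user base in each population.
POPULATION_SHARE = {"general_population": 0.9, "non_native_speakers": 0.1}


def combine(scores, mode="additive"):
    """Combine one population's exposure scores."""
    scores = list(scores)
    if mode == "additive":
        return sum(scores)
    if mode == "multiplicative":
        # Exposures compound: (1 + a)(1 + b) - 1 = a + b + ab.
        return prod(1 + s for s in scores) - 1
    raise ValueError(f"unknown mode: {mode}")


def risk_report(mode="additive", threshold=0.3):
    """Return the weighted overall score, per-population scores, and flagged populations."""
    per_population = {
        pop: combine(exposures.values(), mode) for pop, exposures in EXPOSURES.items()
    }
    overall = sum(POPULATION_SHARE[pop] * s for pop, s in per_population.items())
    flagged = sorted(pop for pop, s in per_population.items() if s > threshold)
    return overall, per_population, flagged


overall, per_population, flagged = risk_report()
print(f"overall={overall:.2f}, flagged={flagged}")
```

Reporting the per-population scores alongside the weighted average is the design choice that matters here: the average alone would hide exactly the vulnerable group the taxonomy is meant to surface.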

Participants also suggested considering Red Teams: neutral groups tasked with ethically hacking the product to identify blind spots and data vulnerabilities. With their findings, the product team can identify harms as they surface and find ways to mitigate the potential risks.

Differential privacy was raised as a technique to minimize exposure of PII (personally identifiable information). A reference by Pier Paolo Ippolito, who did not attend the workshop, offers a definition of it.
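For readers new to the concept, the widely cited formal definition comes from Cynthia Dwork and colleagues: a randomized mechanism M is ε-differentially private if, for any two datasets D and D′ that differ in one person's record and any set of outputs S, Pr[M(D) ∈ S] ≤ e^ε · Pr[M(D′) ∈ S]. A standard way to satisfy it for a count query is the Laplace mechanism, sketched minimally in Python below. The function names are hypothetical, and the sketch uses Python's `random` module, which is not cryptographically secure and is not hardened against known floating-point attacks on naive Laplace sampling; production systems should rely on a vetted differential privacy library.

```python
import random


def laplace_noise(scale, rng):
    """Draw from Laplace(0, scale) as the difference of two exponential draws."""
    # expovariate(1 / scale) has mean `scale`; the difference of two
    # independent such draws is Laplace-distributed with that scale.
    return rng.expovariate(1 / scale) - rng.expovariate(1 / scale)


def private_count(records, predicate, epsilon, rng=None):
    """Release a count under epsilon-differential privacy (Laplace mechanism).

    Adding or removing one person's record changes a count by at most 1
    (sensitivity 1), so Laplace noise with scale 1 / epsilon suffices.
    """
    if epsilon <= 0:
        raise ValueError("epsilon must be positive")
    if rng is None:
        rng = random.Random()
    true_count = sum(1 for r in records if predicate(r))
    return true_count + laplace_noise(1 / epsilon, rng)


# Hypothetical usage: a noisy count of users aged 65 or older.
ages = [23, 67, 45, 71, 38, 66, 29]
print(private_count(ages, lambda age: age >= 65, epsilon=0.5, rng=random.Random(7)))
```

Smaller values of ε add more noise and give stronger privacy; the trade-off between accuracy and privacy is set by that single parameter.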

One participant noted that their hiring recruiters were able to identify specific archetypes the company was predisposed to hiring over others, allowing them to quantify their actions and make changes.
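The participant did not describe their exact method. One common way to quantify such a pattern, and to answer the disparate-impact questions in a data ethics review, is to compare selection rates across groups. The minimal Python sketch below flags any group whose selection rate falls below 80% of the highest group's rate, following the "four-fifths" rule of thumb from the US Uniform Guidelines on Employee Selection Procedures. The function names, group labels, and data are hypothetical, and the ratio is a screening signal rather than proof of bias.

```python
from collections import Counter


def selection_rates(candidates):
    """Map each group to its selection rate from (group, was_selected) pairs."""
    totals, selected = Counter(), Counter()
    for group, was_selected in candidates:
        totals[group] += 1
        selected[group] += int(was_selected)
    return {group: selected[group] / totals[group] for group in totals}


def adverse_impact(candidates, threshold=0.8):
    """Return each group's rate relative to the top group, plus groups below threshold.

    0.8 mirrors the "four-fifths" rule of thumb; a low ratio is a signal to
    investigate, not proof of discrimination.
    """
    rates = selection_rates(candidates)
    best = max(rates.values())
    if best == 0:
        return {group: 1.0 for group in rates}, []
    ratios = {group: rate / best for group, rate in rates.items()}
    flagged = sorted(group for group, ratio in ratios.items() if ratio < threshold)
    return ratios, flagged


# Hypothetical data: 50 candidates per archetype, 20 vs. 10 selected.
candidates = [("archetype_a", i < 20) for i in range(50)] + [
    ("archetype_b", i < 10) for i in range(50)
]
ratios, flagged = adverse_impact(candidates)
print(ratios, flagged)
```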

Three challenges in measurement were identified:

- Forms or other measures that let individuals self-identify risks or score their own actions can be biased, so other measures must be included to triangulate.

- Those negatively impacted by automated decision-making are largely unaware they have been harmed and therefore cannot ask for redress or remediation. Lack of reporting makes it difficult to identify examples of actual harms.

- Differences in countries, cultures, religions, etc. make it difficult to identify a consistent metric to evaluate ethical decisions and/or harms. Context is critical, so organizations have to develop varied measures relevant to each situation.

Critics of ethics

As the field of ethics in tech expands, the community of practitioners recognizes the urgency of supporting each other, especially in the face of recent attacks on their efforts. A few participants had personally been criticized, maligned, or mischaracterized on social media or during public events. Twice as many participants had witnessed these things happen to their peers. In one example, a colleague who was expected to attend the workshop cancelled after recently being doxxed and having her life threatened by a powerful group critical of her company.

Participants identified three primary categories of criticism, along with associated questions we need to address:

- Individual criticism can be motivated by malice or disagreement. In the former case, real threats of harm are made against the individual, usually in the form of doxxing. The latter is usually about factionalism. The criticisms can be based on “ideological purity and whose ethics matter the most. Another form of disagreement is over issues of attribution and building on the work of others.

Questions: How do we effectively attribute our points of view to those whose work we’re building upon? Where do we obtain legitimacy and permission to speak? How do we manage questions around jealousy, legitimacy, and accountability within the space where some are being given a bigger megaphone or bigger speaking platforms?”

- Public criticism of the places where we work, including accusations of “ethics washing” or “selling out” when one is speaking at public events.

Questions: What kind of opportunities are we willing to forego because we cannot engage in meaningful discussion? What are the trade-offs of being in the public eye, vulnerable, and transparent versus going underground because of the threatening attacks?

- Press attacks in the form of mischaracterization, misquoting, and misattribution. Sensational headlines, catchy soundbites, space constraints, and press deadlines can lead even well-intentioned reporters to describe our work inaccurately.

Questions: How does one communicate the complexity and nuance of our work in concise soundbites? How do we ensure that members of the media under pressure to meet deadlines capture the details that really matter when they don’t know which details matter? How do we ensure they give appropriate credit in the publications when a simple soundbite sans credit is catchier?

It is important to acknowledge that no one is implying those working in this field are above questioning or disagreement; however, when the criticism crosses a line to involve personal attacks or threats of harm, it is not acceptable. Meaningful discussion and debate can only happen when both sides are willing to engage in constructive dialog rather than ad hominem attacks. This website has several wonderful resources to help those dealing with or at risk of online harassment. A few additional ideas were identified to move discussions forward in productive ways:

- Build a network to do background checks of people who are reaching out for individual interviews or events. This can help individuals identify those with malicious intent and avoid them.

- Identify attribution standards to appropriately assign credit for the work that we’re building upon.

- Identify or create safe spaces to share and learn from one another.

- Identify strategies to advocate for ourselves within organizations when some may be risk averse in the face of attacks.

- Develop a bat signal to alert this community of practice when someone needs help so others can rally around and provide them the support they need during the most difficult times.

Going far together

The two hours we spent together flew by and the community of practitioners left excited for what is next. We all recognize the importance of sharing our work to learn from each other and to provide accountability among our peers. This workshop is over but our engagement is only beginning.