TL;DR

LogAI is an open-source library designed for log analytics and intelligence. It can process raw logs generated by computer systems and support log analytics tasks such as log clustering and summarization, as well as log intelligence tasks such as log anomaly detection and root-cause analysis. LogAI is compatible with log formats from different log management platforms and adopts the OpenTelemetry log data model to facilitate this compatibility. LogAI provides a unified model interface for popular time-series, statistical learning and deep learning models, and also features an interactive GUI. With LogAI, it’s easy to benchmark popular deep learning algorithms for log anomaly detection without having to spend redundant effort processing logs.

Background

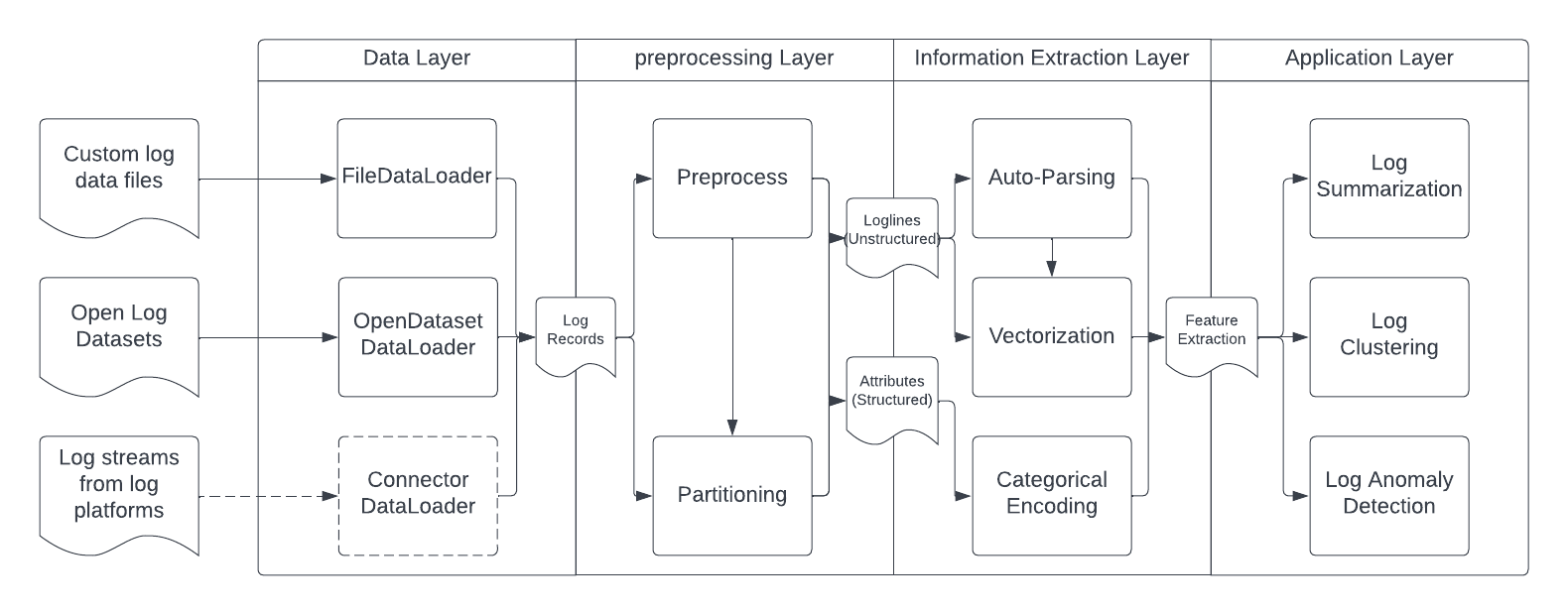

Logs are typically unstructured or semi-structured free-form text messages generated by computer systems. Log analysis involves using a set of tools to process logs and generate human-readable operational insights. These insights can help developers quickly understand system behavior, detect issues, and find root causes. Traditional log analysis relies on manual operations to search and examine raw log messages. In contrast, AI-based log analysis employs AI models to perform analytical tasks such as automatically parsing and summarizing logs, clustering logs, and detecting anomalies. The typical workflow for AI-based log analysis is shown in Figure 1.

Figure 1. Common Log Analysis Process Workflow.

People working on log analysis in academic and industrial settings have different needs depending on their roles. For example, machine learning researchers need to quickly run benchmarking experiments on public log datasets and reproduce results from peer research groups in order to develop new log analysis algorithms. Industrial data scientists need to easily run existing log analysis algorithms on their own log data and select the best combination of algorithm and configuration as their log analysis solution. Data and software engineers need to integrate the selected algorithm into production smoothly and efficiently. Unfortunately, no existing open-source library meets all of these requirements. To address these needs, we introduce LogAI, a library designed to support log analysis across a variety of academic and industrial use cases.

Challenges

Currently, log management platforms lack comprehensive AI-based log analysis capabilities. Although there are some open-source AI-based log analysis projects, such as logparser [https://github.com/logpai/logparser] for automated log parsing and loglizer [https://github.com/logpai/loglizer] for automated anomaly detection, no single library provides capabilities for multiple analytical tasks. Performing different analytical tasks in one place presents several challenges:

No unified log data model for analysis. Logs come in varying formats and schemas, each of which requires customized analysis tools. It is difficult to generalize analytical algorithms without a unified data model.

Redundant effort in data preprocessing and information extraction. Different log analysis algorithms are implemented in separate pipelines. For each task, developers need to build their own preprocessing and information extraction modules, and this work is often duplicated even across different algorithms for the same task.

Difficulty in managing log analysis experiments and benchmarking. Experiments and benchmarking are essential in research and applied science. Currently, there is no unified workflow management mechanism for conducting log analysis benchmarking experiments. For instance, several research groups have proposed deep learning-based methods for log anomaly detection. However, reproducing their experimental results is challenging for other organizations or groups, even when they have access to the code.

LogAI Highlights

To address the challenges outlined above, we are introducing LogAI, a comprehensive toolkit for AI-based log analysis. LogAI supports a variety of analytical tasks, such as log summarization, clustering, and anomaly detection.

LogAI adopts the OpenTelemetry data model. OpenTelemetry is widely used by organizations to instrument, generate, collect, and export log data. By adopting this unified data model, LogAI can process logs from different platforms that follow the same OpenTelemetry data model, creating a standardized log schema for all downstream analytical tasks.
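To make the idea of a unified schema concrete, here is a minimal Python sketch, assuming two hypothetical platforms whose JSON log entries use different field names. Both are mapped onto one record type that borrows a few field names from the OpenTelemetry log data model (timestamp, severity text, body, resource, attributes). The `OTelLogRecord` class and the `from_platform_a`/`from_platform_b` converters are illustrative names, not LogAI's API.

```python
from dataclasses import dataclass, field
from datetime import datetime, timezone
from typing import Any, Dict, Optional


@dataclass
class OTelLogRecord:
    """Hypothetical record using a subset of OpenTelemetry log data model fields."""
    timestamp: datetime
    body: str
    severity_text: Optional[str] = None
    resource: Dict[str, Any] = field(default_factory=dict)    # who produced the log
    attributes: Dict[str, Any] = field(default_factory=dict)  # extra key/value context


def from_platform_a(entry: Dict[str, Any]) -> OTelLogRecord:
    # Hypothetical platform A: {"ts": epoch seconds, "level", "msg", "host"}
    return OTelLogRecord(
        timestamp=datetime.fromtimestamp(entry["ts"], tz=timezone.utc),
        body=entry["msg"],
        severity_text=entry["level"].upper(),
        resource={"host.name": entry["host"]},
    )


def from_platform_b(entry: Dict[str, Any]) -> OTelLogRecord:
    # Hypothetical platform B: {"time": ISO-8601 string, "severity", "message", "service"}
    return OTelLogRecord(
        timestamp=datetime.fromisoformat(entry["time"]),
        body=entry["message"],
        severity_text=entry["severity"].upper(),
        resource={"service.name": entry["service"]},
    )


a = from_platform_a({"ts": 0, "level": "error", "msg": "disk full", "host": "node-1"})
b = from_platform_b({"time": "1970-01-01T00:00:00+00:00", "severity": "ERROR",
                     "message": "disk full", "service": "db"})
# Both sources now share one schema: same timestamp, severity and body.
```

Once both sources are in the same shape, downstream steps only need to understand one schema.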

LogAI unifies workflow interfaces to connect each processing step. Instead of building separate pipelines for different analytical tasks, LogAI offers unified interfaces for preprocessing and information extraction, thus avoiding duplicated efforts to process the same log source for various analytical tasks.

LogAI unifies algorithm interfaces for easy expansion. LogAI offers a unified interface for each processing step, which makes it easy for users to switch between algorithms. Users can switch between different models for log parsing, vectorization, clustering, or anomaly detection simply by adjusting the configuration, without affecting other parts of the pipeline. This feature lets scientists and engineers easily carry out benchmarking and controlled experiments, compare results, and select the best approach.
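A minimal sketch of what a unified algorithm interface can look like, assuming a simple name-based registry: every vectorizer implements the same `fit`/`transform` methods, so the pipeline code in `vectorize` stays the same and only the algorithm name changes. The `Vectorizer` base class, the `register` decorator and both toy algorithms are hypothetical and far simpler than real log vectorizers.

```python
from abc import ABC, abstractmethod
from typing import Callable, Dict, List, Type


class Vectorizer(ABC):
    """Hypothetical shared interface: every vectorizer exposes fit/transform."""

    @abstractmethod
    def fit(self, lines: List[str]) -> "Vectorizer": ...

    @abstractmethod
    def transform(self, lines: List[str]) -> List[List[float]]: ...


VECTORIZERS: Dict[str, Type[Vectorizer]] = {}


def register(name: str) -> Callable[[Type[Vectorizer]], Type[Vectorizer]]:
    """Class decorator that makes an algorithm selectable by name."""
    def decorator(cls: Type[Vectorizer]) -> Type[Vectorizer]:
        VECTORIZERS[name] = cls
        return cls
    return decorator


@register("token_count")
class TokenCountVectorizer(Vectorizer):
    # Toy algorithm: a single feature, the number of tokens in the logline.
    def fit(self, lines: List[str]) -> "TokenCountVectorizer":
        return self

    def transform(self, lines: List[str]) -> List[List[float]]:
        return [[float(len(line.split()))] for line in lines]


@register("bag_of_words")
class BagOfWordsVectorizer(Vectorizer):
    # Toy algorithm: token counts over a vocabulary learned in fit().
    def fit(self, lines: List[str]) -> "BagOfWordsVectorizer":
        vocab = sorted({tok for line in lines for tok in line.split()})
        self.index = {tok: i for i, tok in enumerate(vocab)}
        return self

    def transform(self, lines: List[str]) -> List[List[float]]:
        vectors = []
        for line in lines:
            vec = [0.0] * len(self.index)
            for tok in line.split():
                if tok in self.index:  # unseen tokens are ignored
                    vec[self.index[tok]] += 1.0
            vectors.append(vec)
        return vectors


def vectorize(algo_name: str, lines: List[str]) -> List[List[float]]:
    # Switching algorithms changes only algo_name; this call path stays the same.
    return VECTORIZERS[algo_name]().fit(lines).transform(lines)
```

Because callers only ever see `fit` and `transform`, adding a new algorithm means registering one more class rather than editing the pipeline.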

LogAI uses abstracted configurations for application workflows. LogAI uses configuration files (.json or .yaml) to control application workflows, rather than passing arguments. This approach helps standardize the entire machine learning (ML) process and improves reproducibility. Users can easily manage workflow settings and algorithm parameters in separate configuration files. This design also streamlines the transition from prototype to production.
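As an illustration of configuration-driven workflows, the sketch below loads a JSON workflow description into typed Python dataclasses. The section names (`parsing`, `vectorization`, `anomaly_detection`), the `algo_name`/`params` keys and the parameter values are assumptions made for this example, not LogAI's actual configuration schema; a YAML file would work the same way with a YAML parser.

```python
import json
from dataclasses import dataclass, field
from typing import Any, Dict

# Hypothetical workflow file contents; keys and values are illustrative only.
CONFIG_JSON = """
{
  "parsing": {"algo_name": "drain", "params": {"similarity_threshold": 0.5}},
  "vectorization": {"algo_name": "tfidf"},
  "anomaly_detection": {"algo_name": "isolation_forest", "params": {"n_estimators": 100}}
}
"""


@dataclass
class StepConfig:
    algo_name: str
    params: Dict[str, Any] = field(default_factory=dict)


@dataclass
class WorkflowConfig:
    parsing: StepConfig
    vectorization: StepConfig
    anomaly_detection: StepConfig

    @classmethod
    def from_dict(cls, raw: Dict[str, Any]) -> "WorkflowConfig":
        # Unknown or missing sections raise TypeError, so typos fail fast.
        return cls(**{name: StepConfig(**section) for name, section in raw.items()})


config = WorkflowConfig.from_dict(json.loads(CONFIG_JSON))
```

With this layout, comparing two anomaly detectors amounts to editing one `algo_name` value in the file while the code stays untouched.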

LogAI provides a GUI for interactive analysis. In addition to evaluation metrics, industrial log analysis also relies heavily on visual inspection to validate results. LogAI addresses this need by providing a graphical user interface (GUI) portal for interactive validation and analysis. Users can create a local instance of the portal and start using LogAI without writing a single line of code, simplifying the process for non-technical users.

LogAI Architecture

LogAI contains two main components: the LogAI core library and the LogAI GUI. The LogAI GUI module implements a GUI portal that connects to log analysis applications in the core library and interactively visualizes the analysis results. The architecture of the LogAI core library is shown in Figure 2. It contains four layers:

Figure 2. LogAI Architecture.

Data Layer: The data layer contains the data loaders and the log data model. Raw log data can come in many different formats, so the data layer adopts the unified log data model defined by OpenTelemetry [*]. LogAI also provides a variety of data loaders that read raw log data and convert it into this unified format, stored as LogRecordObjects.
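A data loader of this kind can be sketched with a regular expression, assuming a hypothetical raw format of `<date> <time> <LEVEL> [<component>] <message>`. Each matching line is split into a timestamp, severity, body and attributes; lines that do not match are kept with the full text as the body rather than dropped. The `load_records` function and its dictionary keys are illustrative only and do not reproduce LogAI's loaders or the LogRecordObject structure.

```python
import re
from datetime import datetime
from typing import Dict, Iterable, List

# Hypothetical raw format: "<date> <time> <LEVEL> [<component>] <message>".
LINE_PATTERN = re.compile(
    r"^(?P<date>\d{4}-\d{2}-\d{2}) (?P<time>\d{2}:\d{2}:\d{2}) "
    r"(?P<level>[A-Z]+) \[(?P<component>[^\]]+)\] (?P<message>.*)$"
)


def load_records(raw_lines: Iterable[str]) -> List[Dict[str, object]]:
    """Convert raw lines into unified records; unmatched lines keep their full
    text as the body so nothing is silently dropped."""
    records = []
    for line in raw_lines:
        line = line.rstrip("\n")
        m = LINE_PATTERN.match(line)
        if m is None:
            records.append({"timestamp": None, "severity_text": None,
                            "body": line, "attributes": {}})
            continue
        records.append({
            "timestamp": datetime.strptime(f"{m['date']} {m['time']}", "%Y-%m-%d %H:%M:%S"),
            "severity_text": m["level"],
            "body": m["message"],
            "attributes": {"component": m["component"]},
        })
    return records
```

Keeping unparsed lines instead of discarding them is a deliberate choice here: malformed lines are often the interesting ones in an incident.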

Preprocessing Layer: The preprocessing layer contains components that clean and partition logs. There are two major categories: preprocessors and partitioners. Preprocessors clean logs and extract entities (IDs, labels, etc.), separating each raw log into an unstructured logline and structured log attributes. Partitioners partition and regroup raw logs into the log events used as input to machine learning models, for example by grouping loglines within the same time interval or by generating log sequences under the same identifier. LogAI also provides customized preprocessors for specific open log datasets, such as BGL, HDFS and Thunderbird logs. Preprocessors and partitioners can be customized to support other log formats.
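The two preprocessing steps can be illustrated with a short sketch, assuming HDFS-style loglines where the block identifier (`blk_<number>`) is the entity of interest. `preprocess` masks the identifiers in the logline and moves them into structured attributes, `partition_by_id` builds one log sequence per identifier, and `partition_by_window` counts loglines per fixed time interval. All three functions are hypothetical helpers, not LogAI's preprocessor or partitioner classes.

```python
import re
from collections import defaultdict
from datetime import datetime
from typing import Dict, List, Tuple

BLOCK_ID = re.compile(r"blk_-?\d+")  # HDFS-style block identifier


def preprocess(line: str) -> Tuple[str, Dict[str, List[str]]]:
    """Split a raw line into a cleaned logline and structured attributes."""
    ids = BLOCK_ID.findall(line)
    cleaned = BLOCK_ID.sub("<BLK>", line)
    return cleaned, {"block_ids": ids}


def partition_by_id(lines: List[str]) -> Dict[str, List[str]]:
    """Group cleaned loglines into one ordered sequence per block id."""
    sequences: Dict[str, List[str]] = defaultdict(list)
    for line in lines:
        cleaned, attrs = preprocess(line)
        for block_id in attrs["block_ids"]:
            sequences[block_id].append(cleaned)
    return dict(sequences)


def partition_by_window(timestamps: List[datetime], window_seconds: int) -> Dict[int, int]:
    """Count loglines per fixed time window (window index -> count)."""
    if not timestamps:
        return {}
    start = min(timestamps)
    counts: Dict[int, int] = defaultdict(int)
    for ts in timestamps:
        counts[int((ts - start).total_seconds() // window_seconds)] += 1
    return dict(counts)
```

Identifier-based sequences suit sequence anomaly detectors, while per-window counts suit time-series detectors.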

Information Extraction Layer: The information extraction layer contains modules that convert log records into vectors that can be used as input to machine learning models for the actual analytical tasks. LogAI implements four components in this layer to extract information from the log records and convert logs into the target formats. The log parser component implements a series of automatic parsing algorithms to extract templates from the input loglines. The log vectorizer implements a set of vectorization algorithms that convert free-form log text into numerical representations for each logline. The categorical encoder implements algorithms that encode categorical attributes into numerical representations for each logline. Finally, the feature extractor implements methods that group logline-level representation vectors into log event-level representations.
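The following sketch strings several of these ideas together on a toy scale. As a simplifying assumption, any token containing a digit is treated as a variable and replaced by `<*>`, which is much cruder than template-mining parsers such as Drain. Each distinct template then receives an integer id, and the feature extractor aggregates the ids of a group of loglines into a single count vector. `parse_template`, `TemplateVocabulary` and `event_count_vector` are illustrative names, not LogAI classes.

```python
from collections import Counter
from typing import Dict, List


def parse_template(line: str) -> str:
    """Crude parser: any token containing a digit is treated as a variable."""
    return " ".join(
        "<*>" if any(ch.isdigit() for ch in tok) else tok for tok in line.split()
    )


class TemplateVocabulary:
    """Assigns a stable integer id to each distinct template."""

    def __init__(self) -> None:
        self.ids: Dict[str, int] = {}

    def encode(self, template: str) -> int:
        # New templates get the next free id; known templates keep theirs.
        return self.ids.setdefault(template, len(self.ids))


def event_count_vector(lines: List[str], vocab: TemplateVocabulary) -> List[int]:
    """Feature extraction: aggregate logline-level template ids into one count
    vector for a whole log event; its length is the number of templates seen so far."""
    counts = Counter(vocab.encode(parse_template(line)) for line in lines)
    return [counts.get(i, 0) for i in range(len(vocab.ids))]
```

In a real pipeline the vocabulary would be fixed after a training pass so that every event vector has the same length.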

Analysis Layer: The analysis layer contains modules that carry out the analysis tasks, including, but not limited to, a semantic anomaly detector, a time-series anomaly detector, a sequence anomaly detector, and clustering. Each analysis module provides a unified interface for multiple underlying algorithms.
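As a minimal example of a time-series anomaly detector, the sketch below flags time windows whose log counts deviate strongly from the mean, using a plain z-score rule. This rule is an assumed stand-in chosen for brevity, not one of the algorithms LogAI wraps, and `zscore_anomalies` is a hypothetical function.

```python
from statistics import mean, pstdev
from typing import List


def zscore_anomalies(counts: List[float], threshold: float = 3.0) -> List[int]:
    """Return indices of windows whose count deviates from the mean by more
    than `threshold` population standard deviations."""
    if len(counts) < 2:
        return []
    mu, sigma = mean(counts), pstdev(counts)
    if sigma == 0:  # constant series: nothing stands out
        return []
    return [i for i, c in enumerate(counts) if abs(c - mu) / sigma > threshold]


per_window_counts = [10, 11, 9, 10, 10, 12, 9, 11, 10, 60]
flagged = zscore_anomalies(per_window_counts, threshold=2.5)
```

Because a large spike also inflates the standard deviation, the default threshold of 3 misses the single spike in this short series; robust statistics such as the median absolute deviation are a common fix.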

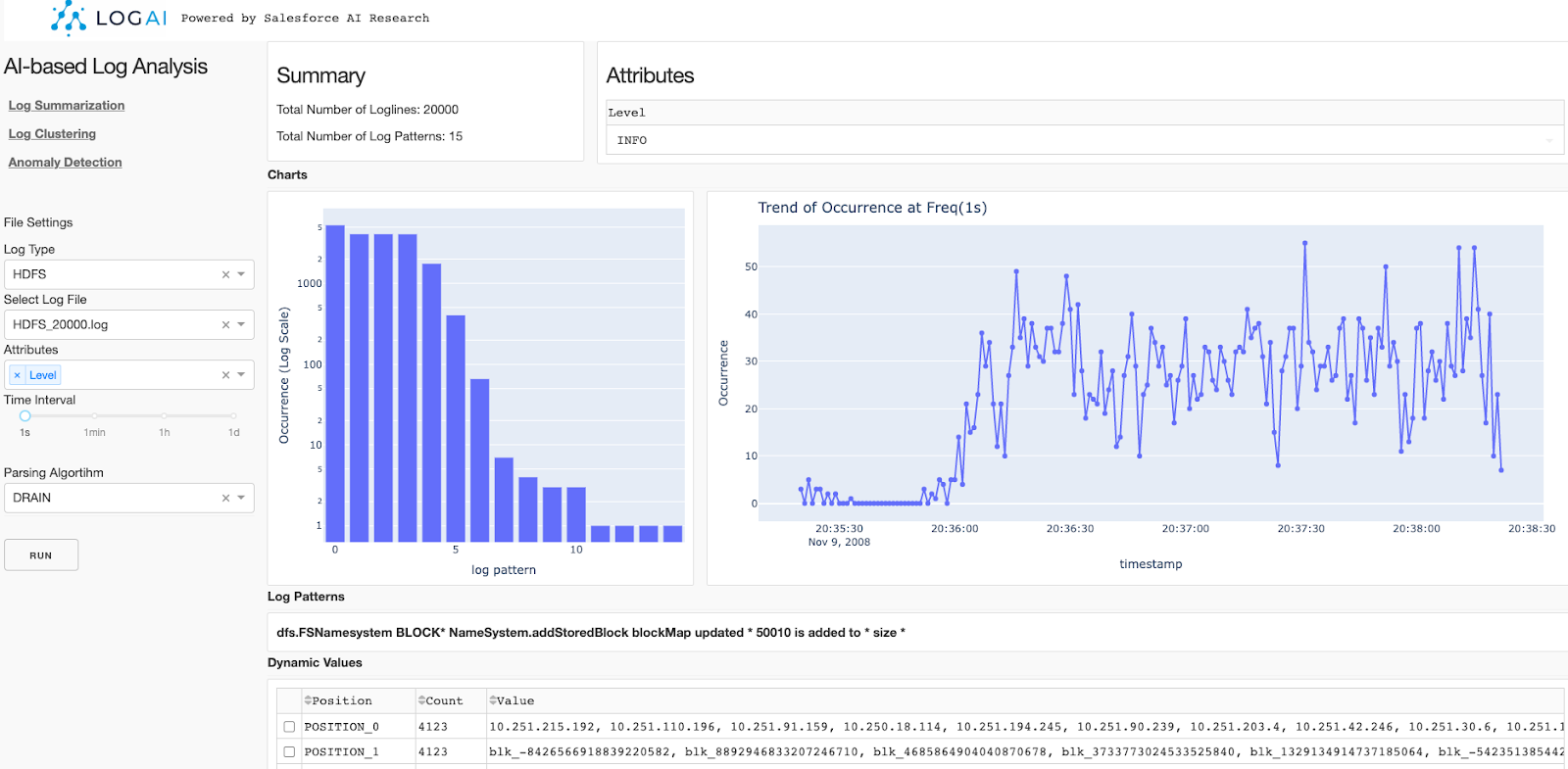

Use LogAI GUI portal to examine your logs

By following the instructions to install and set up LogAI, you can access the LogAI portal at http://localhost:8050/. Alternatively, you can use the portal on our demo website. The portal supports three analytical tasks: log summarization, log clustering and anomaly detection. The control panel on the left side of the page lets you select the target log type and a corresponding log file. You can also select algorithms for each intermediate step. For example, for anomaly detection, you can customize the algorithms and parameters for log parsing, vectorization, categorical encoding and anomaly detection. After you click Run, the results are shown on the right side of the page.

Figure 3. Snapshot of using LogAI GUI portal for log summarization.

Use LogAI to benchmark Deep-learning based anomaly detection

Besides conducting analysis tasks based on statistical machine learning algorithms, LogAI can also be used to benchmark popular deep learning-based log anomaly detection algorithms. LogAI supports deep learning models such as CNN, LSTM and Transformer, and the library integrates deep log anomaly detection application workflows for running log anomaly detection tasks with these models.

In the experiment, we use two popular public log datasets, HDFS and BGL, both of which come with anomaly labels. We benchmark three base models: CNN, LSTM and Transformer. Based on our review of existing work, we break the configuration space down into several binary choices: 1) supervised or unsupervised learning, 2) with or without log parsing, and 3) sequential or semantic log representation. In addition, the LSTM model can be either unidirectional without attention or bidirectional with attention. The CNN model uses 2-D convolution with 1-D max pooling. The Transformer model is a multi-head, single-layer self-attention model trained from scratch.
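To illustrate what a sequential log representation means in practice, this sketch turns a sequence of template ids into model inputs in two common ways: sliding windows with a next-event target (the unsupervised setup popularized by DeepLog) and fixed-length padded sequences paired with a sequence-level label (a supervised setup). The function names, the padding id of 0 and the assumption that real template ids start at 1 are all illustrative; a semantic representation would instead feed embeddings of the log text.

```python
from typing import List, Tuple


def sliding_windows(event_ids: List[int], window_size: int) -> List[Tuple[List[int], int]]:
    """Unsupervised sequential setup: each window of `window_size` event ids is
    an input, and the event id that follows it is the prediction target."""
    return [
        (event_ids[i : i + window_size], event_ids[i + window_size])
        for i in range(len(event_ids) - window_size)
    ]


def pad_sequence(event_ids: List[int], max_len: int, pad_id: int = 0) -> List[int]:
    """Supervised sequential setup: truncate or right-pad a whole sequence
    (e.g. one HDFS block) to a fixed length, to be paired with its label.
    Assumes real template ids start at 1 so that 0 is free for padding."""
    return event_ids[:max_len] + [pad_id] * max(0, max_len - len(event_ids))
```

In the unsupervised case, a window is typically flagged as anomalous when the observed next event is not among the model's top predictions.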

These configurations cover all popular algorithms incorporated in the LOGPAI/deep-loglizer toolkit, and LogAI can cover even more configuration variations. The benchmarking results are shown in Table 1. LogAI performs as well as, if not better than, deep-loglizer on both the HDFS and BGL datasets. Because LogAI also covers additional configuration variations, the results present a fuller picture of the performance of existing deep learning log anomaly detection algorithms. The best performance comes from the supervised bidirectional LSTM model with attention and sequential log representation, an architecture similar to LogRobust.
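Log anomaly detection benchmarks like these are usually compared using precision, recall and F1 computed on the anomalous class. A minimal sketch of those metrics, with 1 meaning anomalous; `precision_recall_f1` is a hypothetical helper, and in practice a library routine such as scikit-learn's `precision_recall_fscore_support` would normally be used.

```python
from typing import List, Tuple


def precision_recall_f1(y_true: List[int], y_pred: List[int]) -> Tuple[float, float, float]:
    """Binary metrics with 1 = anomalous; returns 0.0 where a ratio is undefined."""
    if len(y_true) != len(y_pred):
        raise ValueError("y_true and y_pred must have the same length")
    tp = sum(1 for t, p in zip(y_true, y_pred) if t == 1 and p == 1)
    fp = sum(1 for t, p in zip(y_true, y_pred) if t == 0 and p == 1)
    fn = sum(1 for t, p in zip(y_true, y_pred) if t == 1 and p == 0)
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    f1 = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
    return precision, recall, f1
```

Scoring only the anomalous class matters for datasets like HDFS and BGL, where normal logs dominate and plain accuracy would look high even for a detector that never fires.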

Table 1. Results of deep learning-based log anomaly detection on the HDFS and BGL datasets, compared with the original results reported by LOGPAI/deep-loglizer. LogAI achieves similar performance while covering a larger range of algorithm configurations across LSTM-, CNN- and Transformer-based algorithms.

Explore More

Salesforce AI Research invites you to dive deeper into the concepts discussed in this blog post (see links below). Connect with us on social media and our website to get regular updates on this and other research projects.

- Learn more: Check out our technical report and demo paper, which describe our work in greater detail

- Code: Check out our code on GitHub

- Follow us on Twitter: @SalesforceResearch, @Salesforce

- Blog: To read other blog posts, please see blog.salesforceairesearch.com.

- Main site: To learn more about all of the exciting projects at Salesforce AI Research, please visit our main website at salesforceairesearch.com

About the Authors

Doyen Sahoo is a Senior Manager, Salesforce AI Asia. Doyen leads several projects pertaining to AI for IT Operations or AIOps, working on both fundamental and applied research in the areas of Time-Series Intelligence, Causal Analysis, Log Analysis, End-to-end AIOps (Detection, Causation, Remediation), and Capacity Planning, among others.

Qian Cheng was a Lead Applied Researcher at Salesforce AI Asia, working on a variety of AIOps problems, including Observability Intelligence, Log AI and Time-Series intelligence applications, in addition to contributing to AIOps research efforts.

Amrita Saha is a Senior Research Scientist at Salesforce AI Asia, working on applied machine learning and NLP research in AIOps including Root Cause Analysis, Anomaly Detection and Log Analysis as well as fundamental research on NLP Language Models.

Wenzhuo Yang is a Lead Applied Researcher at Salesforce AI Asia, working on AIOps research and applied machine learning research, including causal machine learning, explainable AI, and recommender systems.

Chenghao Liu is a Senior Applied Scientist at Salesforce AI Asia, working on AIOps research, including time series forecasting, anomaly detection, and causal machine learning.

Gerald Woo is a Ph.D. candidate in the Industrial PhD Program at Singapore Management University and a researcher at Salesforce AI Asia. His research focuses on deep learning for time series, including representation learning and forecasting.

Steven HOI is VP / Managing Director of Salesforce AI Asia and development activities in APAC. His research interests include machine learning and a broad range of AI applications.