Responsible AI Matters: How Leading Practitioners Are Implementing It

We brought together 24 practitioners from 17 organizations to talk about a success in their practice that they believed others could emulate, or a challenge they were facing and wanted to brainstorm with others. Here we summarize the insights from two breakout discussions: Governance/Accountability and Incentives.

Since 2018, Salesforce has organized informal workshops among Responsible AI (RAI) practitioners to create a community, share best practices and insights, and offer each other support and guidance in this challenging field. Following each workshop, we have shared these insights to create better experiences for businesses, consumers, and the public when leveraging AI (Dec. 2018, Jul. 2019, Feb. 2019, Jan. 2020, Jun. 2022).

Unfortunately, the COVID-19 pandemic brought an end to in-person gatherings for a time. So, we were thrilled to gather once again for our first in-person RAI practitioner workshop in June of this year! We brought together 24 practitioners from 17 organizations, 13 of whom gave lightning talks about either a success in their practice that they believed others could emulate or a challenge they were facing and wanted to brainstorm with other practitioners. Attendees, armed with the standard Post-it notes and Sharpies, jotted down questions, ideas, and topics for the breakouts. We then voted on the topics of most interest and broke into two groups for deeper discussion: Governance/Accountability and Incentives.

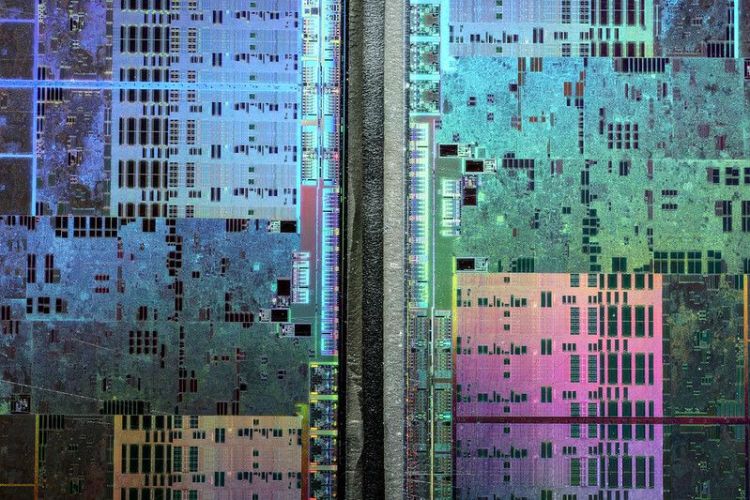

![Photos of 21 of the participants at the workshop. [Left to right, top to bottom] Kathy Baxter, Salesforce; Danielle Cass, Microsoft; Don Heider, Markkula Center for Applied Ethics; Irina Raicu, Markkula Center for Applied Ethics; Mark Van Hollebeke, Microsoft; Yoav Schlesinger, Salesforce; Chloe Autio, The Cantellus Group; Joaquin Quiñonero Candela, LinkedIn; Polina Zvyagina, Meta; Krishnaram Kenthapadi, Fiddler.ai; Toni Morgan, TikTok; Ian Eisenberg, Credo.ai; Luca Belli, Twitter; Trey Causey, Indeed; Eric Yu, SAS; Ben Roome, Ethical Resolve; Ganka Hadjipetrova, Airbnb; Kevin Bankston, Meta; Camille Taltas, Warner Music Group; Jacob Metcalf, Ethical Resolve; Javier Salido, Airbnb](https://www.salesforce.com/blog/wp-content/uploads/sites/2/2022/08/markkula-participants.png?strip=all&quality=95)

Governance/Accountability

The breakout group that focused on Governance and Accountability identified two main mechanisms across companies for identifying risks and harms in AI systems: (1) Triage and reviews by RAI teams and (2) Notifications by employees or impacted stakeholders.

(1) Triage and reviews by RAI teams

Each of the companies that participated in the workshop has developed or is developing an ethical review or impact assessment process for its AI systems, such as Microsoft’s Responsible AI Framework, which was released shortly before the workshop. Because resources for such assessments are limited, several participants discussed the importance of triaging to prioritize the models or systems that have the highest potential for creating harm and to determine the allocation of resources required to mitigate it.

Many of the AI review processes that were discussed involve working with AI developers to answer a series of questions or complete a written assessment and then submitting those results to the RAI team or some other expert body for review and discussions. While participants highlighted the value of the deep thought and dialogue that can be prompted by these engagements with development teams, the challenges of scaling such highly qualitative assessments across large engineering organizations with varying levels of experience with RAI issues were also noted. This has led some participants to explore how to automate parts of the review process through better collection of key metadata about AI systems early in the development lifecycle to drive initial risk assessments, and through the development of standardized mitigations to match to specific types of AI products or AI risks, while still working to ensure that there is an appropriate level of manual review by experts throughout the process. Some participants have also begun to invest in more RAI training for their development teams so that front-line engineers and managers can more effectively and accurately answer the questionnaires and assessment forms on which RAI reviews rely.

Not all companies will have the instrumentation and tooling to enable this type of automation, but for those that do, automating at least part of the process can create greater efficiency, scale, and accuracy. Several teams also talked about how early and active engagement can help ensure that development teams are fully bought in, build their “moral imaginations,” and keep critical issues from being missed by automation.
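To make the idea of metadata-driven triage more concrete, here is a minimal sketch in Python. It is not any participant's actual process: the metadata fields, the scoring rule, the reach threshold, and the mitigation texts are all hypothetical and chosen only for illustration. It shows how a few facts collected early in development could drive an initial risk tier and a list of standardized mitigations, while still routing anything above the lowest tier to expert review.

```python
from dataclasses import dataclass


@dataclass
class SystemMetadata:
    """Hypothetical metadata collected early in the development lifecycle."""
    name: str
    affects_individuals: bool        # makes or informs decisions about people
    uses_sensitive_attributes: bool  # e.g. health, race, or age data
    fully_automated: bool            # no human in the loop
    user_reach: int                  # approximate number of people affected


# Hypothetical mapping from risk flags to standardized mitigations.
STANDARD_MITIGATIONS = {
    "affects_individuals": "Provide an appeal or recourse channel",
    "uses_sensitive_attributes": "Run a subgroup bias evaluation before launch",
    "fully_automated": "Add human review for high-impact decisions",
}


def triage(system: SystemMetadata) -> dict:
    """Return an initial risk tier and suggested mitigations for one system."""
    flags = [flag for flag in STANDARD_MITIGATIONS if getattr(system, flag)]
    # Illustrative scoring: one point per flag, plus one for large reach.
    score = len(flags) + (1 if system.user_reach >= 100_000 else 0)
    if score >= 3:
        tier = "high"
    elif score >= 1:
        tier = "medium"
    else:
        tier = "low"
    return {
        "system": system.name,
        "tier": tier,
        "mitigations": [STANDARD_MITIGATIONS[flag] for flag in flags],
        # Automation only prioritizes; experts still review non-low tiers.
        "needs_expert_review": tier != "low",
    }


example = SystemMetadata("resume-ranker", True, True, True, 500_000)
print(triage(example))
```

In practice the scoring rule would come from the RAI team's own risk taxonomy, and the output would open a review ticket rather than replace the conversation with the development team.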

(2) Notifications by employees and stakeholders

Regardless of the triage process, all companies represented recognize the importance of stakeholder input, and most have invested in user experience research, community juries, and/or listening mechanisms. One participant noted a shortcoming of user research and user-submitted reports: those who are impacted often don’t realize it, or don’t understand the impact well enough to report it accurately. To them, it can be just how the system works. They haven’t witnessed how the system works for others, nor do they know that the system was designed to work differently from what they have experienced. Engaging with watchdog groups, organizing ethical bug bounties, and enabling constant monitoring of what the AI system is actually doing for all sub-populations were suggested as important mechanisms for identifying when harm can and does occur, and for finding ways to mitigate it.
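As one illustration of what monitoring outcomes across sub-populations might look like, the following Python sketch compares the rate of favorable outcomes per group and flags groups that fall well below the best-served group. The record format, the function names, and the 0.2 gap threshold are assumptions made for this example, not a method described by the participants, and a real deployment would need metrics suited to its own context.

```python
from collections import defaultdict


def outcome_rates_by_group(records):
    """Compute the favorable-outcome rate per group.

    records: iterable of (group, outcome) pairs, where outcome is 1 for a
    favorable result and 0 otherwise (a hypothetical logging format).
    """
    totals = defaultdict(int)
    favorable = defaultdict(int)
    for group, outcome in records:
        totals[group] += 1
        favorable[group] += outcome
    return {group: favorable[group] / totals[group] for group in totals}


def flag_disparities(records, max_gap=0.2):
    """Return groups whose rate trails the best-served group by > max_gap."""
    rates = outcome_rates_by_group(records)
    if not rates:
        return []
    best = max(rates.values())
    return sorted(g for g, rate in rates.items() if best - rate > max_gap)


# Example: group "b" receives favorable outcomes far less often than "a".
log = [("a", 1)] * 8 + [("a", 0)] * 2 + [("b", 1)] * 5 + [("b", 0)] * 5
print(flag_disparities(log))
```

A check like this only surfaces a gap; deciding whether the gap reflects harm, and what to do about it, still depends on the human review and stakeholder engagement described above.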

Many participants noted that employees can be key stakeholders in identifying potential risks and harms. Companies have created multiple mechanisms for employees to report suspected or observed harms, including anonymous ethics hotlines, RAI group mailing lists, internal social media channels dedicated to ethical use, and ethics advisory councils. It was noted that a consistent, repeatable, transparent process for reviews also requires reporting the results to employees and documenting them for future reference.

One of the participants noted that their company holds structured feedback sessions with its customers but also constantly monitors many channels for unstructured feedback. The team is trained to know what to look for in the reports, how to interpret them, and how to engage with the community.

Incentives

Incentive structures to reward RAI development and enforce consequences for unethical behavior are a frequent topic of discussion among RAI practitioners. Incentives are just one part of a mature RAI culture, but they can be one of the hardest pieces to implement, for several reasons. First, it can be difficult to know which incentives actually motivate people, especially across different roles, parts of the company, or regions of the world if you are a global company. Second, incentives can result in new, unintended behaviors that may or may not be desirable. Finally, monitoring can present a challenge: Are you rewarding the behavior you want to reward in a timely manner? Can the behavior you wish to reward continue without an immediate incentive in place, or does the change revert over time? The breakout group discussed the need for both internal and external pressures to incentivize change.

(1) Internal Incentives

Participants identified employee activism as one of the incentives for change. Many companies are training employees on ethical use and empowering them to say something when they see concerning technology development or use. But incentives have to be aligned throughout the entire organization and contextual to each role. This means that product managers and engineers need to be rewarded when they stop unethical features from launching even if it means potential lost revenue. One participant raised the concern that sometimes teams must build features that support ethical use but the company feels like they cannot charge for them because it would be like charging for seat belts – these are features that should just come standard as part of the product or service. As a result, ethics may appear to be a cost center. Executives must be better educated about how these features can not only result in customer success and avoid harm but may also result in a larger total addressable market.

Similarly, some participants suggested that sales teams should be rewarded when they catch and stop a sale that could violate the company’s principles. The RAI team at one company has implemented training and certification that its sales teams must complete in order to sell the company’s AI products.

Some attendees mentioned how challenging it can be to incentivize executives who may prefer to limit RAI investment to what is required by law, or who perceive potential risks in delving too deep into potential AI-related harms where it’s not required. Several participants found that they had the most success when they highlighted how accepting some short-term cost and risk from establishing robust RAI practices now will substantially reduce costs and risks in the long term when it comes to meeting the emerging expectations of policymakers and the public. Without a long-term vision and investment, the RAI practice risks remaining reactive, running from one urgent issue to the next rather than building a sustainable culture of AI ethics that can ultimately succeed in meeting those expectations.

(2) External Pressures

Potential and existing regulations are the most frequently cited and top-of-mind external incentive for change. Many multinational companies are paying attention to regulations being proposed in the EU, China, Brazil, and elsewhere. A recent survey by Accenture showed that 97% of C-suite executives across 17 geographies and 20 industries believe that AI regulations will impact their businesses to some extent. Several participants noted that highlighting the need to prepare for compliance with emerging AI regulations can be a useful way to begin conversations about responsible AI practices if your company is building AI that will be affected by the regulation. Some participants, however, also pointed out that being compliant with regulation isn’t necessarily the same as being ethical, and suggested treating regulatory requirements as a floor rather than a ceiling for defining best practices.

Other external forces identified were boards (both boards of directors and ethical advisory boards), shareholders, watchdog groups, negative press, and competitors. In one survey, IBM found that 75% of executives rated AI ethics as important in 2021, as opposed to only 50% of executives in 2018. It is very likely that as more regulations are implemented, companies that can demonstrate they not only work responsibly but have the tools and resources to help their customers work responsibly will have a significant advantage over companies that cannot.

Conclusion

The event ended on a positive note, with many of the individuals who have been participating in these workshops since 2018 noting the growth in the number of organizations with RAI teams, the sophistication of questions being asked, and the number of success stories being shared. Although the number of headlines about harmful AI has increased rather than decreased over the years, attendees felt more confident that we are close to standards for RAI and that we have an even larger community of practitioners to learn from.