Imagine you were a young child and wanted to ask about something. Being so young (and assuming you are not exceedingly precocious), how would you describe a new object, the name of which you have yet to learn? The intuitive answer: point to it!

Surprisingly, neural networks have the same issue. Neural networks typically use a pre-defined vocabulary on the order of hundreds of thousands of words. Even so, these pre-defined vocabularies cannot capture all the words we use in natural language! Inevitably, new and rare words arise that the networks struggle to understand and use properly. To remedy this, we introduce the pointer sentinel mixture model, which allows neural networks to ‘point’ to relevant, recently observed words. This can help in machine translation and language modeling, where rare words (e.g. uncommon names) come up fairly often.

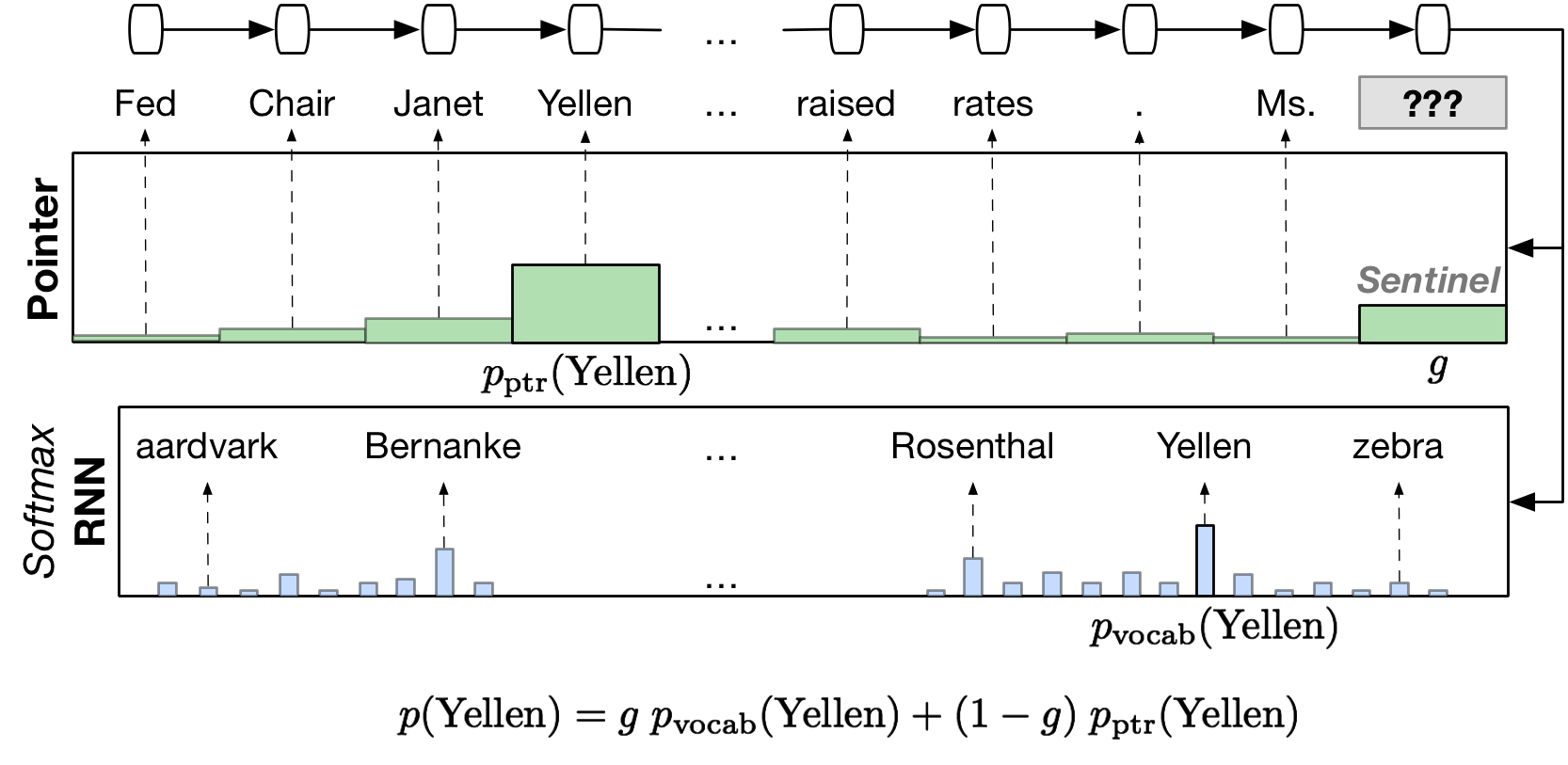

In the example above, an RNN is trying to guess the next word after seeing a set of sentences like “Fed Chair Janet Yellen … raised rates. Ms. ???”.

At the bottom: The neural network tries to guess using the fixed set of words it has in its vocabulary. This knowledge may be more related to the distant past, such as old references or training data involving the previous Fed Chair Ben Bernanke.

At the top: The pointer network is able to look at the story’s recent history. By looking at the relevant context, it realizes that ‘Janet Yellen’ will likely be referred to again. Recognizing that ‘Janet’ is a first name and that a last name is needed, the network points to ‘Yellen’.

By mixing the two information sources – by first “pointing” to recent relevant words using context and then otherwise using the RNN’s internal memory and vocabulary if there’s no good context – the mixture model is able to get a far more confident answer.
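The mixing step can be sketched in a few lines of plain Python. This is only an illustration under stated assumptions, not the exact model: it assumes the pointer produces one score per word in the recent window plus one score for a "sentinel" slot, that a single softmax is taken over all of them, and that the probability mass landing on the sentinel acts as the weight given to the vocabulary prediction. The function name `pointer_sentinel_mix` and all scores and probabilities below are hypothetical values chosen for the example, not outputs of a trained network.

```python
import math


def softmax(scores):
    """Numerically stable softmax over a list of floats."""
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def pointer_sentinel_mix(vocab_probs, window, pointer_scores, sentinel_score):
    """Mix a vocabulary distribution with a pointer over recent words.

    vocab_probs: dict word -> probability from the RNN's fixed vocabulary.
    window: list of recently seen words (may contain out-of-vocabulary words).
    pointer_scores: one attention score per word in `window`.
    sentinel_score: score for the sentinel, i.e. "fall back to the vocabulary".
    """
    # Joint softmax over the window positions and the sentinel slot.
    attn = softmax(list(pointer_scores) + [sentinel_score])
    gate = attn[-1]  # share of the prediction handed to the vocabulary

    # Vocabulary probabilities are scaled by the gate...
    mixed = {word: gate * p for word, p in vocab_probs.items()}
    # ...and each window position adds its attention mass to its word.
    for word, a in zip(window, attn[:-1]):
        mixed[word] = mixed.get(word, 0.0) + a
    return mixed, gate


# Hypothetical numbers for the "Ms. ???" example.
vocab_probs = {"Bernanke": 0.6, "Smith": 0.3, "<unk>": 0.1}
window = ["Fed", "Chair", "Janet", "Yellen", "raised", "rates"]
pointer_scores = [0.0, 0.0, 1.0, 4.0, 0.0, 0.0]

mixed, gate = pointer_sentinel_mix(vocab_probs, window, pointer_scores, 1.0)
best_word = max(mixed, key=mixed.get)
print(best_word, round(gate, 3))
```

With these made-up scores, most of the attention lands on ‘Yellen’ in the window, so the mixed distribution prefers it even though it is absent from the toy vocabulary. When no window word scores well, the sentinel receives most of the mass and the result stays close to the vocabulary prediction.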

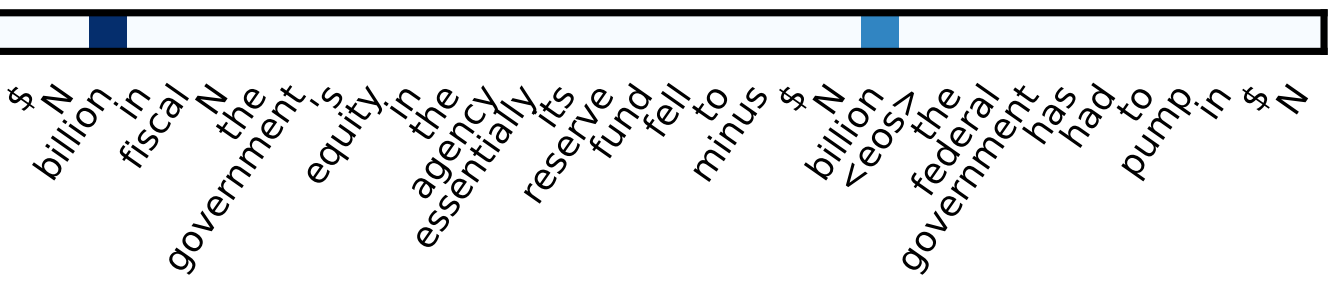

While it’s obvious to use this in the case of names, which may be rarely seen or even entirely new to the neural network, the pointer sentinel method works on a broad class of other word types too. One of the most interesting is units of measure. Even though units of measure, such as [kilograms, tons] or [million, billion], are very common words, the neural network still relies heavily on the pointer mechanism for them!

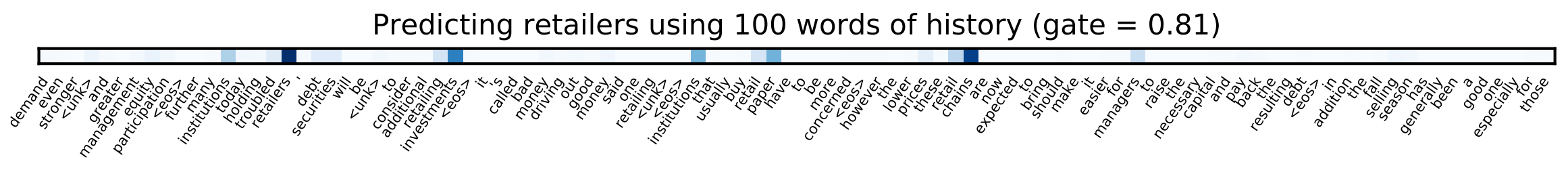

Even for common nouns, the pointer can be heavily relied upon. In predicting “the fall season has been a good one especially for those retailers”, the pointer component suggests many words from the historical window that would fit – retailers, investments, chains, and institutions – by pointing to them with various levels of intensity.

Results

We combine the pointer sentinel mixture model with an LSTM (Long Short-Term Memory, a special type of RNN). We call this the pointer sentinel LSTM. Using the pointer sentinel LSTM, we are able to achieve state-of-the-art results on two different datasets.

Penn Treebank (PTB)

The first, a version of the Penn Treebank dataset, is a language modeling dataset that has been worked on for many years. In language modeling, perplexity is a measure of how uncertain the model is about the next word. As such, the lower the perplexity, the better. The pointer sentinel LSTM is able to achieve a lower perplexity than other comparable models. It also does so using fewer parameters, which means that the pointer sentinel LSTM learns more efficiently as well.

| Model | Params | Validation perplexity | Test perplexity |
|---|---|---|---|
| Zaremba et al. (2014) – LSTM (medium) | 20M | 86.2 | 82.7 |
| Zaremba et al. (2014) – LSTM (large) | 66M | 82.2 | 78.4 |
| Gal (2015) – Variational LSTM (medium) | 20M | 81.9 ± 0.2 | 79.7 ± 0.1 |
| Gal (2015) – Variational LSTM (medium, MC) | 20M | − | 78.6 ± 0.1 |
| Gal (2015) – Variational LSTM (large) | 66M | 77.9 ± 0.3 | 75.2 ± 0.2 |
| Gal (2015) – Variational LSTM (large, MC) | 66M | − | 73.4 ± 0.0 |
| Kim et al. (2016) – CharCNN | 19M | − | 78.9 |
| Zilly et al. (2016) – Variational RHN | 32M | 72.8 | 71.3 |
| Merity et al. (2016) – Our Pointer Sentinel-LSTM (medium) | 21M | 72.4 | 70.9 |

*Lower perplexity is better.*
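To make the metric concrete, here is a minimal sketch that assumes the standard definition of perplexity: the exponential of the average negative log-probability the model assigned to each correct next word. The function name `perplexity` and the probabilities are hypothetical illustrations, not values from the experiments above.

```python
import math


def perplexity(target_probs):
    """Perplexity from the probabilities a model gave to the correct words.

    target_probs: list of probabilities in (0, 1], one per predicted word.
    """
    avg_neg_log_prob = -sum(math.log(p) for p in target_probs) / len(target_probs)
    return math.exp(avg_neg_log_prob)


# A model that is always torn between 4 equally likely words has perplexity 4.
uniform_over_four = perplexity([0.25, 0.25, 0.25, 0.25])

# More confident (and correct) predictions give a lower perplexity.
confident = perplexity([0.9, 0.8, 0.95])
uncertain = perplexity([0.2, 0.1, 0.3])
print(uniform_over_four, confident < uncertain)
```

Read this way, a test perplexity of 70.9 roughly corresponds to a model that is, on average, as uncertain as if it were choosing uniformly among about 71 words at each step.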

WikiText

The Penn Treebank is considered small and old by modern dataset standards, so we decided to create a new dataset, WikiText, to challenge the pointer sentinel LSTM. The WikiText dataset is extracted from high-quality articles on Wikipedia and is over 100 times larger than the Penn Treebank. WikiText also features a more diverse and modern set of topics, including Super Mario!

= Super Mario Land = Super Mario Land is a 1989 side @-@ scrolling platform video game , the first in the Super Mario Land series , developed and published by Nintendo as a launch title for their Game Boy handheld game console . In gameplay similar to that of the 1985 Super Mario Bros. , but resized for the smaller device 's screen , the player advances Mario to the end of 12 levels by moving to the right and jumping across platforms to avoid enemies and pitfalls . Unlike other Mario games , Super Mario Land is set in Sarasaland , a new environment depicted in line art , and Mario pursues Princess Daisy . The game introduces two Gradius @-@ style shooter levels . At Nintendo CEO Hiroshi Yamauchi 's request , Game Boy creator Gunpei Yokoi 's Nintendo R & D1 developed a Mario game to sell the new console . It was the first portable version of Mario and the first to be made without Mario creator and Yokoi protégé Shigeru Miyamoto . Accordingly , the development team shrunk Mario gameplay elements for the device and used some elements inconsistently from the series . Super Mario Land was expected to showcase the console until Nintendo of America bundled Tetris with new Game Boys . The game launched alongside the Game Boy first in Japan ( April 1989 ) and later worldwide . Super Mario Land was later rereleased for the Nintendo 3DS via Virtual Console in 2011 again as a launch title , which featured some tweaks to the game 's presentation . Initial reviews were laudatory . Reviewers were satisfied with the smaller Super Mario Bros. , but noted its short length . They considered it among the best of the Game Boy launch titles . The handheld console became an immediate success and Super Mario Land ultimately sold over 18 million copies , more than that of Super Mario Bros. 3 . Both contemporaneous and retrospective reviewers praised the game 's soundtrack . 
Later reviews were critical of the compromises made in development and noted Super Mario Land 's deviance from series norms . The game begot a series of sequels , including the 1992 Super Mario Land 2 : 6 Golden Coins , 1994 Wario Land : Super Mario Land 3 , and 2011 Super Mario 3D Land , though many of the original 's mechanics were not revisited . The game was included in several top Game Boy game lists and debuted Princess Daisy as a recurring Mario series character .

The pointer sentinel mixture model gives neural networks a better grasp of natural language, and this assists models on a range of tasks from question answering to machine translation. By better handling rare and out-of-vocabulary words, our model improves how neural networks can be applied in future applications. We have also provided a modern and realistic language modeling dataset, WikiText, which we hope new language modeling work will use.

Citation credit

Stephen Merity, Caiming Xiong, James Bradbury, Richard Socher

Pointer Sentinel Mixture Models