Generative artificial intelligence (AI) and large language models (LLMs) have turned the world of conversation design upside down. Moving from rule-based, predictable chatbots to designing for open-ended generative AI that processes and understands natural language requires a new mindset.

When experimenting with conversational AI, it’s easy to get lost in the innovation and forget the principles behind it. That’s when resources such as our Conversation Design Guidelines for Salesforce Lightning Design System (SLDS) can provide direction in this new era. Here are four key insights from the guidelines to help you get started.

What is conversational AI?

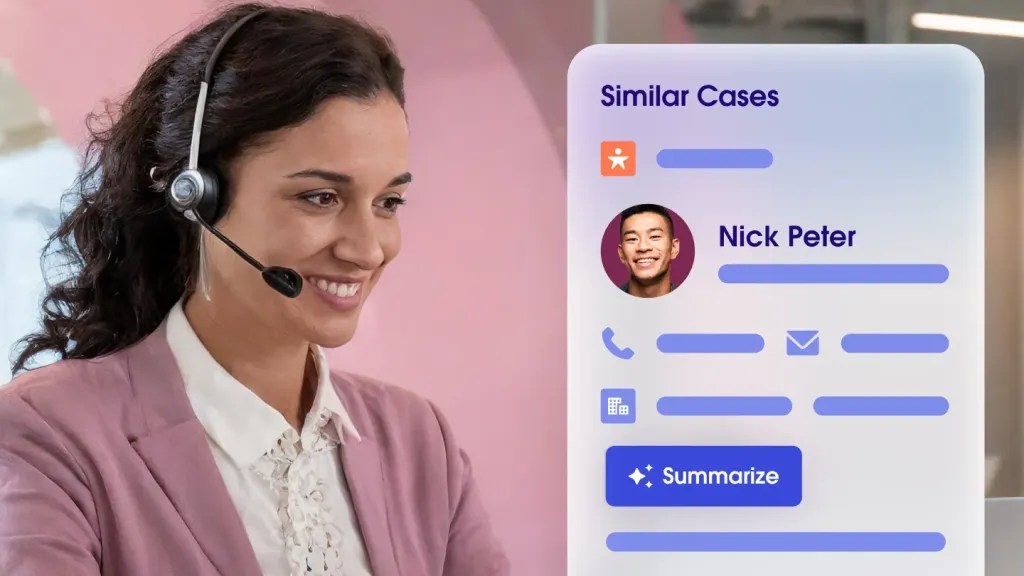

Conversation design (CXD) is the process of designing turn-taking interactions for conversational interfaces, such as chatbots and voicebots. You’ve likely experienced a basic chatbot when requesting, say, account information through your bank’s website or submitting a help request to troubleshoot a computer glitch. This type of bot has specific parameters and can respond only to requests that fall within those boundaries.

In the generative AI world, interactions between users and machines mimic the natural language and intent of human conversations. Designing for conversational AI is the process of creating such interactions, which involves processing natural language, understanding meaning or intent, generating natural language replies, and refining how the AI responds to future prompts.

Start with these conversational AI design guidelines:

These guidelines should serve as a primer for designers as they grow accustomed to working with conversational interactions. Knowing what paths a user might take and considering possible content variations can help inform designs.

1. Use existing patterns

Established conversation design patterns still apply to generative AI. For example, when creating prompts to generate emails, we might borrow existing conversational patterns for chatbot greetings. When we think about the tone and voice of our outputs, we can also use persona guidelines. Or we might ask whether the content the LLM generates should be more professional or more personable. How might we represent either of those traits linguistically? We also need to know what the experience looks like for users across devices and in different real-world environments.

Regardless of your role, you might have to create conversational copy or interactive flows for your product or for prompt testing. Even if you don’t have training in linguistics and you’re not responsible for writing all the copy, you’ll still interact with the tool that produces that content. Many of the same rules of conversational interaction still apply.

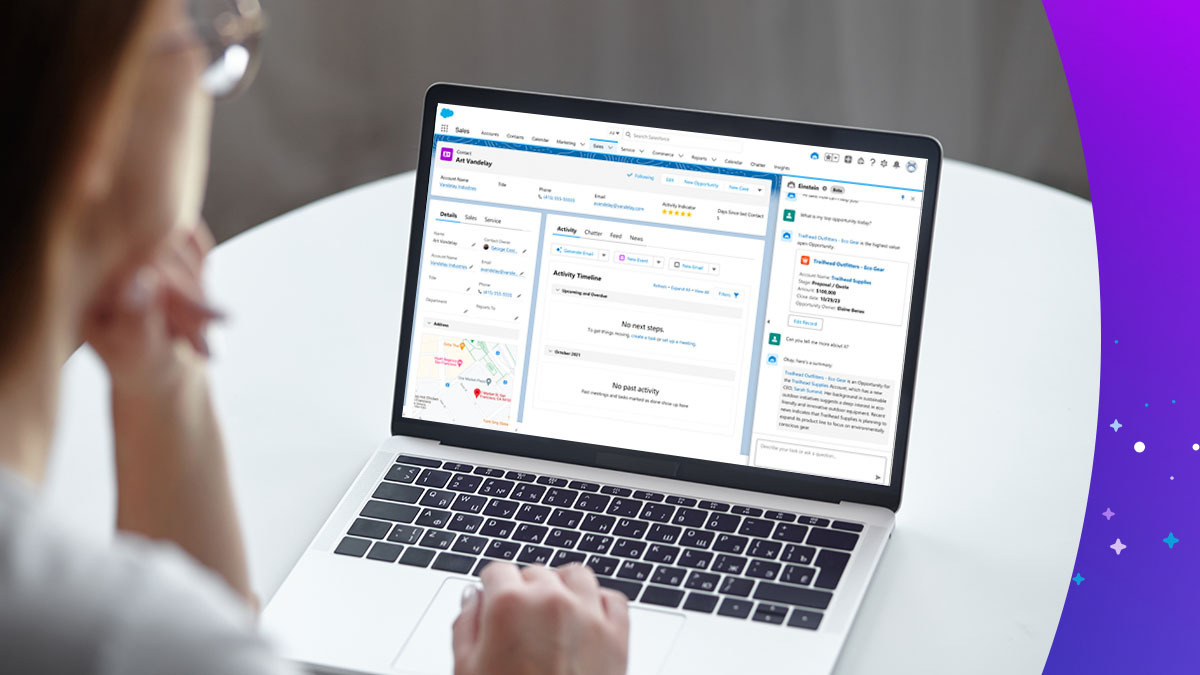

As a senior conversation designer at Salesforce, I’ve worked on a variety of features and products involving conversational AI and generative AI. Let’s look at a few key areas of the guidelines and examples of how they’ve influenced my team’s approach to conversational AI.

The following examples relate to bot conversations and static prompts, but because the guidelines were written for turn-taking interactions, they apply just as well to turn-taking experiences with copilots.

2. Make bot personality traits universal

The Bot Personality section of the SLDS guidelines advises designers to define personality basics first. The goal isn’t to give bots human-like personalities, though. Instead, focus on the bot’s language and choose phrasing that acknowledges the interaction. Other bot developers and designers offer similar advice, suggesting that you think about what functions a bot can fulfill and how it can help a user reach their goal.

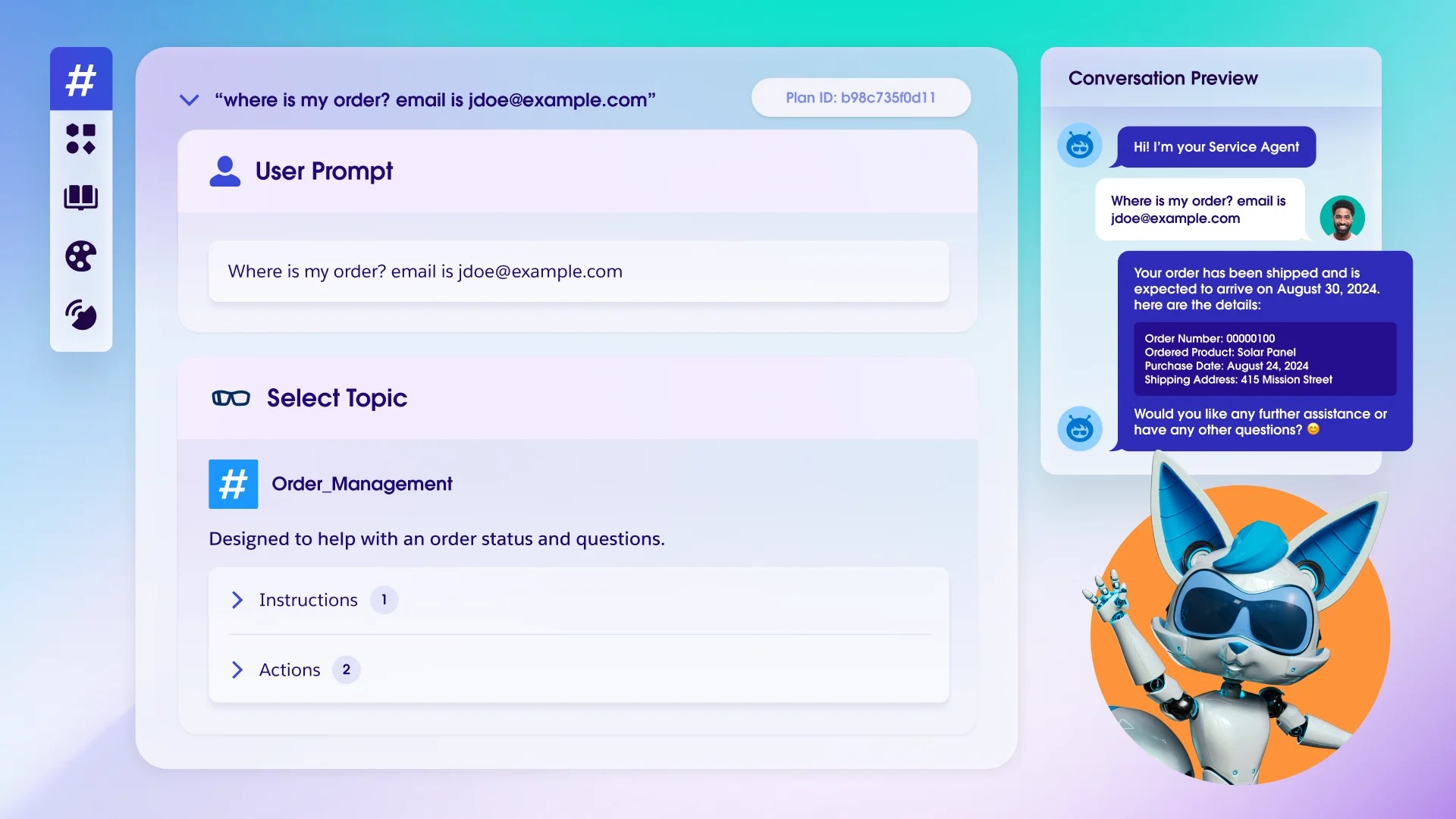

For example, I recently supported Salesforce’s Einstein Bots modularity effort with teams in Service Cloud and Commerce Cloud. The feature aims to provide out-of-the-box functionality for Einstein Bots that can work either as a fully fledged bot or, with a few adjustments, as an addition to an existing bot. Because each block or template could be used by customers in any industry for bots of any type, we had to choose between a specific persona and a blank slate. We chose the latter. While bot admins can tailor their bot dialog, we kept our conversational patterns broad. We included universal traits, like enthusiasm, that people prefer in service experiences.

3. Remember that language and style influence meaning

But deciding that a bot should be enthusiastic is different from designing an enthusiastic bot. How does enthusiasm show up in sentences, especially if a bot is purely chat-based with no voice component?

Whether we obsess over word choice or brush it off, every message we write, short or long, is an exercise in language. The Language and Style guidelines help designers understand commonly overlooked aspects of language, such as discourse markers (“oh,” “so,” or “well”), and how they shape the way we interpret meaning.

In the bots modularity effort, we used punctuation and concise wording to convey enthusiasm: exclamation points, frequent second-person (“you”) references, and sentence fragments that signal next steps or ask the user for information.

This focus on language can also help answer another big question about generative AI: how can you guarantee language consistency?

Truthfully, there is no 100% guarantee of consistency. Because LLMs are generative and process each prompt independently, even the same prompt can produce a new, unique output. Still, writing your own sample outputs will help you revise a prompt to more closely match your expectations.

Content or conversation guidelines might prohibit certain words, such as “sorry,” or require you to write out numbers, such as “nine” instead of the numeral “9.” You can apply the same rules to LLMs by prompting the model to avoid certain words or to use specific grammatical or linguistic elements.
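Because prompting alone can’t guarantee compliance, one option is to pair the style rules in the prompt with an automated check of each generated output. The Python sketch below assumes two hypothetical rules drawn from the examples above: never use “sorry,” and spell out single-digit numbers. The rule set, the prompt wording, and the names `build_prompt` and `check_output` are illustrative only; they are not part of the SLDS guidelines or any Salesforce API.

```python
import re

# Hypothetical content rules; substitute your own team's guidelines.
BANNED_WORDS = {"sorry"}
# Matches a standalone single digit such as "9" (but not "12").
SINGLE_DIGIT = re.compile(r"\b\d\b")


def build_prompt(task: str) -> str:
    """Append the style rules to a task prompt (wording is illustrative)."""
    rules = [
        "Do not use these words: " + ", ".join(sorted(BANNED_WORDS)) + ".",
        "Write numbers from zero to nine as words, for example 'nine' instead of '9'.",
    ]
    return task + "\n\nStyle rules:\n" + "\n".join(f"- {rule}" for rule in rules)


def check_output(text: str) -> list[str]:
    """Return the guideline violations found in a generated reply."""
    problems = []
    words = re.findall(r"[a-z']+", text.lower())
    for word in sorted(BANNED_WORDS):
        if word in words:
            problems.append(f"banned word: {word}")
    for digit in SINGLE_DIGIT.findall(text):
        problems.append(f"numeral should be spelled out: {digit}")
    return problems


print(check_output("Sorry, I found 9 items."))
```

A reply that fails the check can be regenerated or flagged for review. The check doesn’t make the model consistent, but it catches the rule-breaking outputs that prompting alone would let through.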

4. Focus on accessibility and inclusion

We also want to consider accessibility. A user may be interacting with AI through any number of devices, channels, or circumstances.

For users of screen readers, consider skipping emojis in conversational copy or limiting how many you use. Emojis are hard for screen readers to convey, and they can be hard for people to interpret, too. An emoji might not fully match the text, or its use may carry norms that have evolved along with popular culture and slang.
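When bot copy is generated, a post-processing step can enforce an emoji budget regardless of what the model produces. The Python sketch below assumes a rough set of Unicode emoji ranges and a hypothetical `max_emojis` limit. The ranges are not exhaustive, and multi-code-point sequences (skin-tone modifiers, joined emoji) are not treated as single emoji, so production code would need full Unicode emoji data.

```python
import re

# Rough, assumed emoji ranges (pictographs, symbols, dingbats) plus an
# optional variation selector. Not an exhaustive emoji definition.
EMOJI_PATTERN = re.compile("[\U0001F300-\U0001FAFF\u2600-\u27BF]\uFE0F?")


def limit_emojis(text: str, max_emojis: int = 1) -> str:
    """Keep at most max_emojis emoji in text and drop the rest."""
    count = 0

    def keep_or_drop(match: re.Match) -> str:
        nonlocal count
        count += 1
        return match.group(0) if count <= max_emojis else ""

    trimmed = EMOJI_PATTERN.sub(keep_or_drop, text)
    # Collapse the double spaces left behind by removed emoji.
    return re.sub(r" {2,}", " ", trimmed).strip()


print(limit_emojis("Great news 🎉 your order shipped 🚚 today ✅", 1))
```

Setting `max_emojis` to 0 removes emoji entirely, which may suit channels where screen reader use is common.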

Accessibility and inclusion also relate to localization and general word choice: do our words mean what we want them to? Different languages have different cultural norms and practices that might influence sentence structure or tone. Words can also have multiple meanings that lead to unintended interpretations. When a reply says, “I was unable to add all items to your order,” does that mean no items were added, or only some?

To avoid this, keep language as simple as possible. Avoid jargon and technical language so every user can understand the message without having to leave the conversation. Instead of saying, “I was unable to add all items to your order,” consider displaying the products that were included, along with an error message.
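One way to remove the ambiguity is to build the reply from the actual order result, listing what was added and what wasn’t. The Python sketch below is hypothetical: `CartResult` and `order_update_message` are illustrative names, not part of Einstein Bots or any Salesforce API, and the copy uses the short fragments described earlier.

```python
from dataclasses import dataclass, field


@dataclass
class CartResult:
    """Hypothetical result of an add-to-order request."""
    added: list[str] = field(default_factory=list)
    failed: list[str] = field(default_factory=list)


def order_update_message(result: CartResult) -> str:
    """Build a reply that states exactly which items were and weren't added."""
    lines = []
    if result.added:
        lines.append("Added to your order:")
        lines.extend(f"- {item}" for item in result.added)
    if result.failed:
        lines.append("Couldn't add:")
        lines.extend(f"- {item}" for item in result.failed)
    if not lines:
        return "Your order hasn't changed."
    return "\n".join(lines)


print(order_update_message(CartResult(added=["Blue mug"], failed=["Red kettle"])))
```

Because the message lists items explicitly, the user never has to guess whether “all items” means none or some.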

Especially in the world of generative AI, designers need to remember the principles behind conversation design and design systems. Every bit of copy adds dimension to a conversational AI exchange with a customer or user, so the design matters.